- Multiple Exceptions (user mode) - Modeling Example

- Multiple Exceptions (kernel mode)

- Multiple Exceptions (managed space)

- Multiple Exceptions (stowed)

- Dynamic Memory Corruption (process heap)

- Dynamic Memory Corruption (kernel pool)

- Dynamic Memory Corruption (managed heap)

- False Positive Dump

- Lateral Damage (general)

- Lateral Damage (CPU mode)

- Optimized Code (function parameter reuse)

- Invalid Pointer (general)

- Invalid Pointer (objects)

- NULL Pointer (code)

- NULL Pointer (data)

- Inconsistent Dump

- Hidden Exception (user space)

- Hidden Exception (kernel space)

- Hidden Exception (managed space)

- Deadlock (critical sections)

- Deadlock (executive resources)

- Deadlock (mixed objects, user space)

- Deadlock (LPC)

- Deadlock (mixed objects, kernel space)

- Deadlock (self)

- Deadlock (managed space)

- Deadlock (.NET Finalizer)

- Changed Environment

- Incorrect Stack Trace

- OMAP Code Optimization

- No Component Symbols

- Insufficient Memory (committed memory)

- Insufficient Memory (handle leak)

- Insufficient Memory (kernel pool)

- Insufficient Memory (PTE)

- Insufficient Memory (module fragmentation)

- Insufficient Memory (physical memory)

- Insufficient Memory (control blocks)

- Insufficient Memory (reserved virtual memory)

- Insufficient Memory (session pool)

- Insufficient Memory (stack trace database)

- Insufficient Memory (region)

- Insufficient Memory (stack)

- Spiking Thread

- Module Variety

- Stack Overflow (kernel mode)

- Stack Overflow (user mode)

- Stack Overflow (software implementation)

- Stack Overflow (insufficient memory)

- Stack Overflow (managed space)

- Managed Code Exception

- Managed Code Exception (Scala)

- Managed Code Exception (Python)

- Truncated Dump

- Waiting Thread Time (kernel dumps)

- Waiting Thread Time (user dumps)

- Memory Leak (process heap) - Modeling Example

- Memory Leak (.NET heap)

- Memory Leak (page tables)

- Memory Leak (I/O completion packets)

- Memory Leak (regions)

- Missing Thread (user space)

- Missing Thread (kernel space)

- Unknown Component

- Double Free (process heap)

- Double Free (kernel pool)

- Coincidental Symbolic Information

- Stack Trace

- Stack Trace (I/O request)

- Stack Trace (file system filters)

- Stack Trace (database)

- Stack Trace (I/O devices)

- Virtualized Process (WOW64)

- Virtualized Process (ARM64EC and CHPE)

- Stack Trace Collection (unmanaged space)

- Stack Trace Collection (managed space)

- Stack Trace Collection (predicate)

- Stack Trace Collection (I/O requests)

- Stack Trace Collection (CPUs)

- Coupled Processes (strong)

- Coupled Processes (weak)

- Coupled Processes (semantics)

- High Contention (executive resources)

- High Contention (critical sections)

- High Contention (processors)

- High Contention (.NET CLR monitors)

- High Contention (.NET heap)

- High Contention (sockets)

- Accidental Lock

- Passive Thread (user space)

- Passive System Thread (kernel space)

- Main Thread

- Busy System

- Historical Information

- Object Distribution Anomaly (IRP)

- Object Distribution Anomaly (.NET heap)

- Local Buffer Overflow (user space)

- Local Buffer Overflow (kernel space)

- Early Crash Dump

- Hooked Functions (user space)

- Hooked Functions (kernel space)

- Hooked Modules

- Custom Exception Handler (user space)

- Custom Exception Handler (kernel space)

- Special Stack Trace

- Manual Dump (kernel)

- Manual Dump (process)

- Wait Chain (general)

- Wait Chain (critical sections)

- Wait Chain (executive resources)

- Wait Chain (thread objects)

- Wait Chain (LPC/ALPC)

- Wait Chain (process objects)

- Wait Chain (RPC)

- Wait Chain (window messaging)

- Wait Chain (named pipes)

- Wait Chain (mutex objects)

- Wait Chain (pushlocks)

- Wait Chain (CLR monitors)

- Wait Chain (RTL_RESOURCE)

- Wait Chain (modules)

- Wait Chain (nonstandard synchronization)

- Wait Chain (C++11, condition variable)

- Wait Chain (SRW lock)

- Corrupt Dump

- Dispatch Level Spin

- No Process Dumps

- No System Dumps

- Suspended Thread

- Special Process

- Frame Pointer Omission

- False Function Parameters

- Message Box

- Self-Dump

- Blocked Thread (software)

- Blocked Thread (hardware)

- Blocked Thread (timeout)

- Zombie Processes

- Wild Pointer

- Wild Code

- Hardware Error

- Handle Limit (GDI, kernel space)

- Handle Limit (GDI, user space)

- Missing Component (general)

- Missing Component (static linking, user mode)

- Execution Residue (unmanaged space, user)

- Execution Residue (unmanaged space, kernel)

- Execution Residue (managed space)

- Optimized VM Layout

- Invalid Handle (general)

- Invalid Handle (managed space)

- Overaged System

- Thread Starvation (realtime priority)

- Thread Starvation (normal priority)

- Duplicated Module

- Not My Version (software)

- Not My Version (hardware)

- Data Contents Locality

- Nested Exceptions (unmanaged code)

- Nested Exceptions (managed code)

- Affine Thread

- Self-Diagnosis (user mode)

- Self-Diagnosis (kernel mode)

- Self-Diagnosis (registry)

- Inline Function Optimization (unmanaged code)

- Inline Function Optimization (managed code)

- Critical Section Corruption

- Lost Opportunity

- Young System

- Last Error Collection

- Hidden Module

- Data Alignment (page boundary)

- C++ Exception

- Divide by Zero (user mode)

- Divide by Zero (kernel mode)

- Swarm of Shared Locks

- Process Factory

- Paged Out Data

- Semantic Split

- Pass Through Function

- JIT Code (.NET)

- JIT Code (Java)

- Ubiquitous Component (user space)

- Ubiquitous Component (kernel space)

- Nested Offender

- Virtualized System

- Effect Component

- Well-Tested Function

- Mixed Exception

- Random Object

- Missing Process

- Platform-Specific Debugger

- Value Deviation (stack trace)

- Value Deviation (structure field)

- Runtime Thread (CLR)

- Runtime Thread (Python, Linux)

- Coincidental Frames

- Fault Context

- Hardware Activity

- Incorrect Symbolic Information

- Message Hooks - Modeling Example

- Coupled Machines

- Abridged Dump

- Exception Stack Trace

- Distributed Spike

- Instrumentation Information

- Template Module

- Invalid Exception Information

- Shared Buffer Overwrite

- Pervasive System

- Problem Exception Handler

- Same Vendor

- Crash Signature

- Blocked Queue (LPC/ALPC)

- Fat Process Dump

- Invalid Parameter (process heap)

- Invalid Parameter (runtime function)

- String Parameter

- Well-Tested Module

- Embedded Comment

- Hooking Level

- Blocking Module

- Dual Stack Trace

- Environment Hint

- Top Module

- Livelock

- Technology-Specific Subtrace (COM interface invocation)

- Technology-Specific Subtrace (dynamic memory)

- Technology-Specific Subtrace (JIT .NET code)

- Technology-Specific Subtrace (COM client call)

- Dialog Box

- Instrumentation Side Effect

- Semantic Structure (PID.TID)

- Directing Module

- Least Common Frame

- Truncated Stack Trace

- Data Correlation (function parameters)

- Data Correlation (CPU times)

- Module Hint

- Version-Specific Extension

- Cloud Environment

- No Data Types

- Managed Stack Trace

- Managed Stack Trace (Scala)

- Managed Stack Trace (Python)

- Coupled Modules

- Thread Age

- Unsynchronized Dumps

- Pleiades

- Quiet Dump

- Blocking File

- Problem Vocabulary

- Activation Context

- Stack Trace Set

- Double IRP Completion

- Caller-n-Callee

- Annotated Disassembly (JIT .NET code)

- Annotated Disassembly (unmanaged code)

- Handled Exception (user space)

- Handled Exception (.NET CLR)

- Handled Exception (kernel space)

- Duplicate Extension

- Special Thread (.NET CLR)

- Hidden Parameter

- FPU Exception

- Module Variable

- System Object

- Value References

- Debugger Bug

- Empty Stack Trace

- Problem Module

- Disconnected Network Adapter

- Network Packet Buildup

- Unrecognizable Symbolic Information

- Translated Exception

- Regular Data

- Late Crash Dump

- Blocked DPC

- Coincidental Error Code

- Punctuated Memory Leak

- No Current Thread

- Value Adding Process

- Activity Resonance

- Stored Exception

- Spike Interval

- Stack Trace Change

- Unloaded Module

- Deviant Module

- Paratext

- Incomplete Session

- Error Reporting Fault

- First Fault Stack Trace

- Frozen Process

- Disk Packet Buildup

- Hidden Process

- Active Thread (Mac OS X)

- Active Thread (Windows)

- Critical Stack Trace

- Handle Leak

- Module Collection

- Module Collection (predicate)

- Deviant Token

- Step Dumps

- Broken Link

- Debugger Omission

- Glued Stack Trace

- Reduced Symbolic Information

- Injected Symbols

- Distributed Wait Chain

- One-Thread Process

- Module Product Process

- Crash Signature Invariant

- Small Value

- Shared Structure

- Thread Cluster

- False Effective Address

- Screwbolt Wait Chain

- Design Value

- Hidden IRP

- Tampered Dump

- Memory Fluctuation (process heap)

- Last Object

- Rough Stack Trace (unmanaged space)

- Rough Stack Trace (managed space)

- Past Stack Trace

- Ghost Thread

- Dry Weight

- Exception Module

- Reference Leak

- Origin Module

- Hidden Call

- Corrupt Structure

- Software Exception

- Crashed Process

- Variable Subtrace

- User Space Evidence

- Internal Stack Trace

- Distributed Exception (managed code)

- Thread Poset

- Stack Trace Surface

- Hidden Stack Trace

- Evental Dumps

- Clone Dump

- Parameter Flow

- Critical Region

- Diachronic Module

- Constant Subtrace

- Not My Thread

- Window Hint

- Place Trace

- Stack Trace Signature

- Relative Memory Leak

- Quotient Stack Trace

- Module Stack Trace

- Foreign Module Frame

- Unified Stack Trace

- Mirror Dump Set

- Memory Fibration

- Aggregated Frames

- Frame Regularity

- Stack Trace Motif

- System Call

- Stack Trace Race

- Hyperdump

- Disassembly Ambiguity

- Exception Reporting Thread

- Active Space

- Subsystem Modules

- Region Profile

- Region Clusters

- Source Stack Trace

- Hidden Stack

- Interrupt Stack

- False Memory

- Frame Trace

- Pointer Cone

- Context Pointer

- Pointer Class

- False Frame

- Procedure Call Chain

- C++ Object

- COM Exception

- Structure Sheaf

- Saved Exception Context (.NET)

- Shared Thread

- Spiking Interrupts

- Structure Field Collection

- Black Box

- Rough Stack Trace Collection (unmanaged space)

- COM Object

- Shared Page

- Exception Collection

- Dereference Nearpoint

- Address Representations

- Near Exception

- Shadow Stack Trace

- Past Process

- Foreign Stack

- Annotated Stack Trace

- Disassembly Summary

- Region Summary

- Analysis Summary

- Region Spectrum

- Normalized Region

- Function Pointer

- Interrupt Stack Collection

- DPC Stack Collection

- Dump Context

- False Local Address

- Encoded Pointer

- Latent Structure

- ISA-Specific Code

Debugging and Category Theory

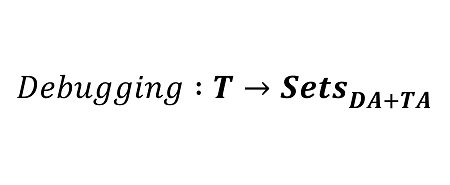

What is debugging? There are many definitions out there, including analogies with forensic science, victimology, and criminology. There are also definitions involving set theory. They focus on the content of debugging artifacts such as source code and its execution paths and values. We give a different definition based on debugging actions and using category theory. We also do not use mathematical notation in what follows.

What is category theory? We do not give a precise mathematical definition based on axioms but provide a conceptual one as a worldview while omitting many details. A category is a collection of objects and associated arrows between them. Every pair of objects has a collection of arrows between them, which can be empty. So an arrow must have a source and a target object. Several sequential arrows can be composed into one arrow. We can even consider arrows as objects themselves, but this is another category with its own new arrows between the arrows taken as objects. If we consider categories themselves as objects, with arrows between them, we have yet another category. So we can quickly build complex models out of these simple ingredients.

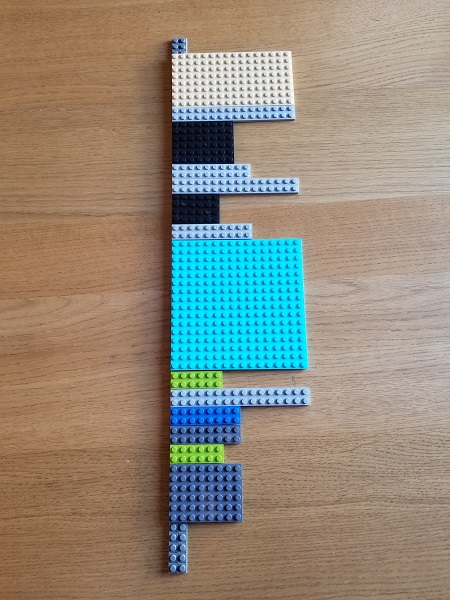

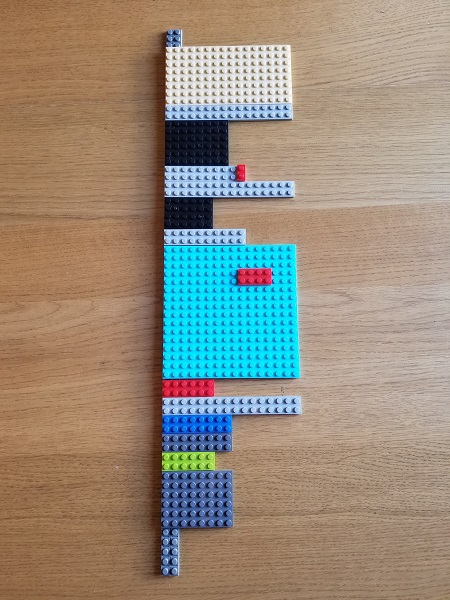

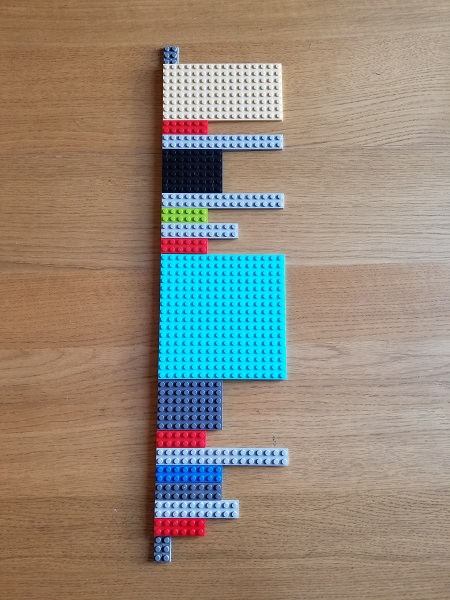

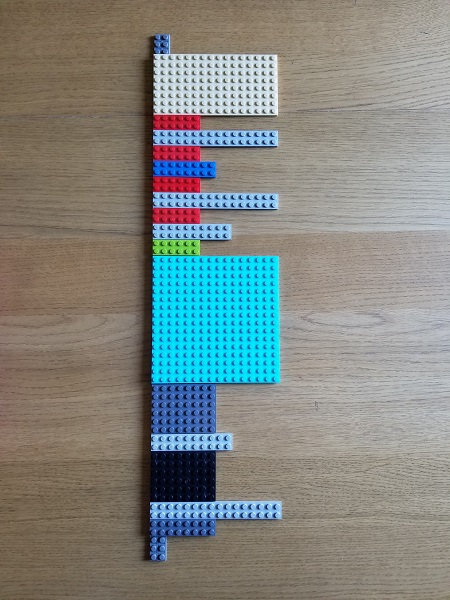

Can we build a conceptual model of debugging using objects and arrows? Yes, and it even has a particular name in category theory: a presheaf. So, debugging is a presheaf. To answer the question of what a presheaf is, we start constructing our debugging model, focusing on objects and arrows. To avoid using mathematical language that may obscure debugging concepts, we use LEGO® bricks because we can feel the objects and arrows, and most importantly, arrows as objects (see visual category theory for more details). This hands-on activity also reminds us that debugging is a construction process.

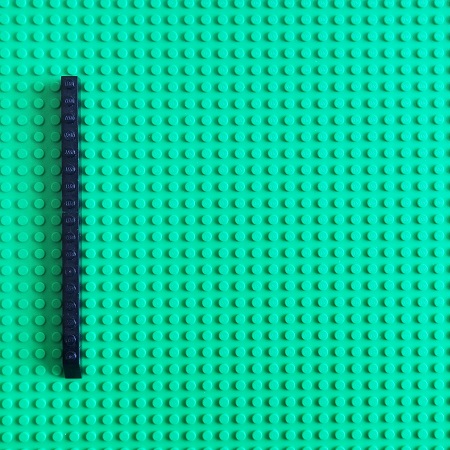

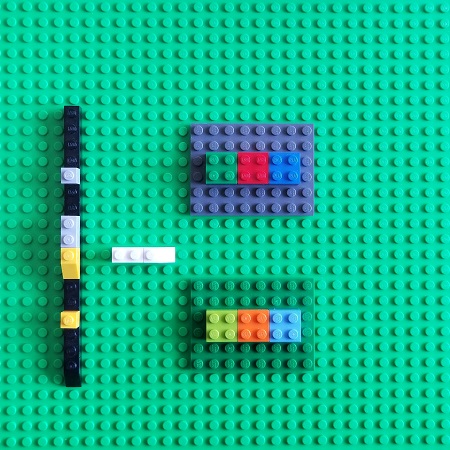

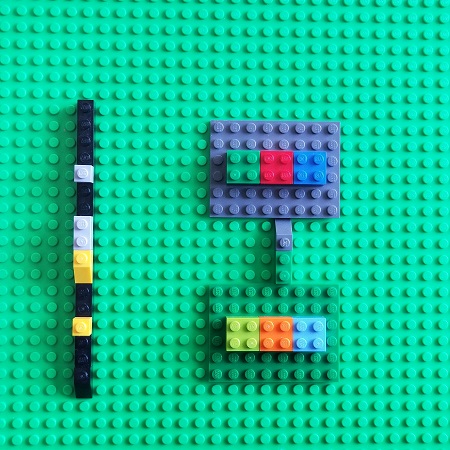

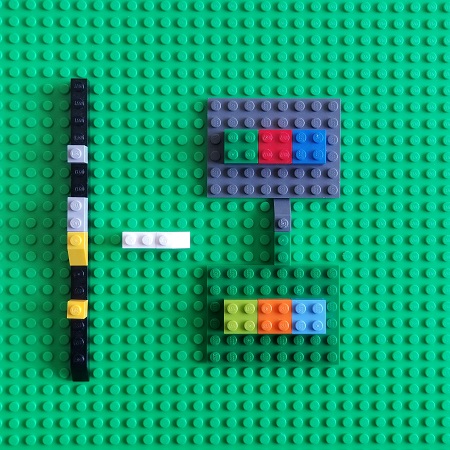

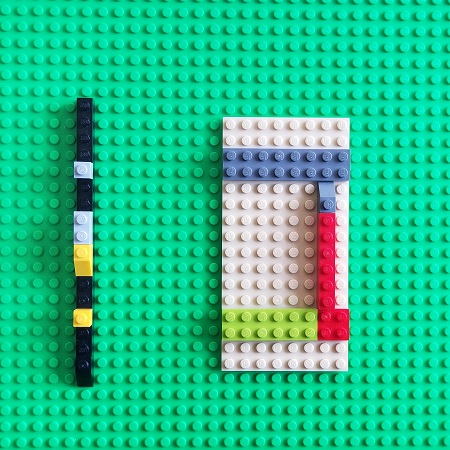

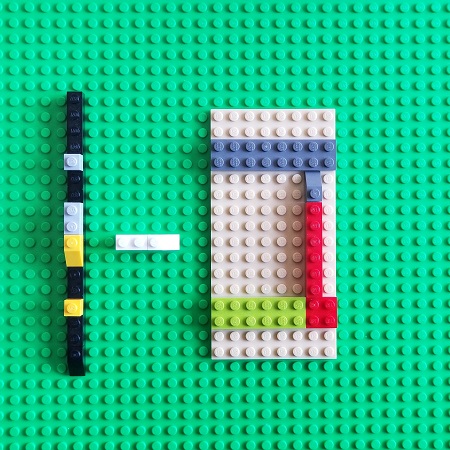

Debugging activity involves time. We, therefore, construct a time arrow that represents software execution:

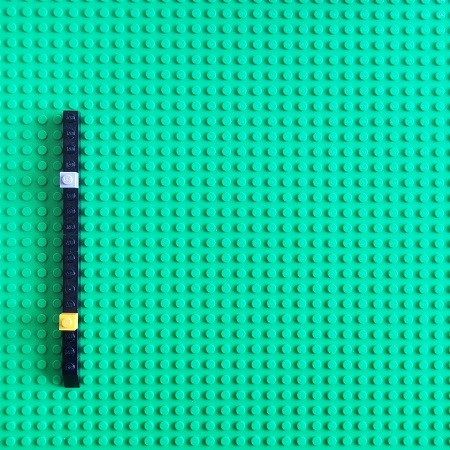

We pick two Time objects representing different execution times:

In our Time category, an arrow means the flow of time. It can also be some indexing scheme for time events or other objects (a different category) that represents some repeated activity. Please note that an arrow has specific object indicators assigned to it. Different object pairs have different arrows. It is not apparent when we use black and white mathematical notation and diagrams.

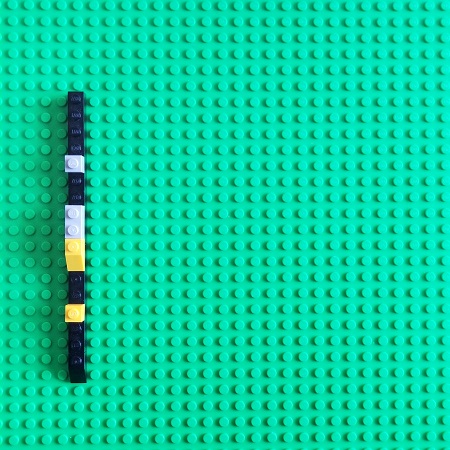

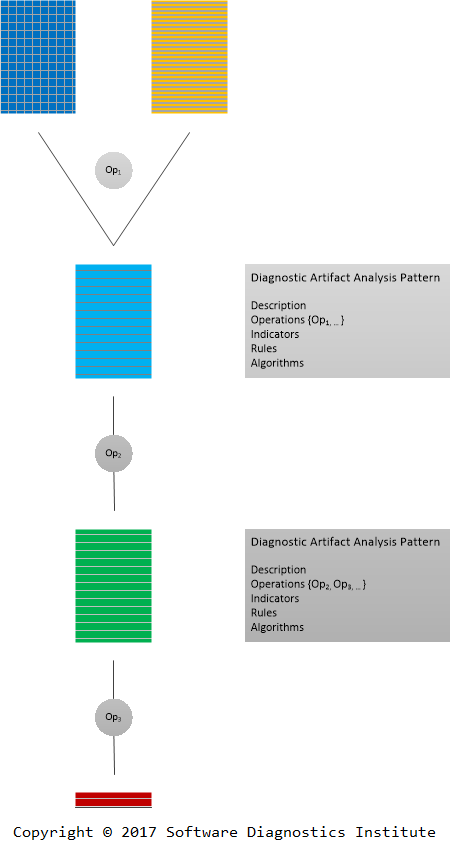

We can associate with Time objects some external objects, for example, memory snapshots, or some other software execution states, variables, execution artifacts, or even parts of the same artifact:

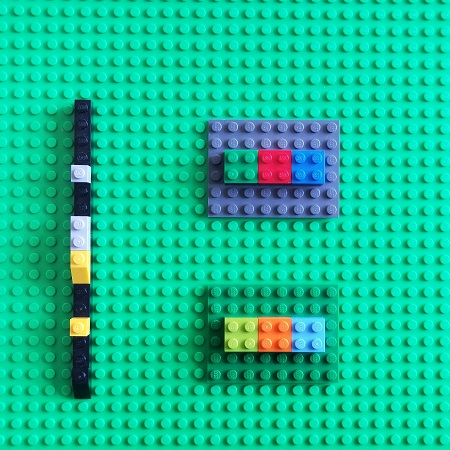

Therefore, we have a possible mapping from the Time category to a possible category of software execution artifacts that we name DA+TA (abbreviated [memory] dump artifacts + trace [log] artifacts). DA+TA objects are simply some sets useful for debugging. The mapping between different categories is usually called a functor in category theory. It maps objects from the source category to objects in the target category. It is itself an arrow in the category that includes source and target categories as objects:

However, we forgot to designate arrows in the target DA+TA category. Of course, a different choice of arrows makes a different category. We choose arrows that represent debugging activities that go back in time when we try to find the root cause, for example, walking a stack trace. It is a reverse activity:

A functor that maps arrows to reversed arrows is called a contravariant functor in category theory:

Such a contravariant functor from a category to the category of some sets is called a presheaf. Now we look at debugging using software traces and logs as another target category of sets. With our Time category objects, we associate different log messages:

When we use log and trace files for debugging, we also go back in time, trying to find the root cause message (or a set of messages) or some other clues:

Again, we have a presheaf, a contravariant functor that maps our Time category objects to sets of messages:

So we see again that debugging is a presheaf, a contravariant functor that maps software execution categories, such as a category of time instants, to sets of software execution artifacts.

The trace and log analysis pattern catalog includes another example of candidate source and target categories for a debugging presheaf: the Trace Presheaf analysis pattern, which maps trace messages to memory snapshots (sets of memory cells or some other state information).
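The construction above can also be sketched in a few lines of Python. This is only an illustrative model under stated assumptions, not the article's own formalization: the log contents, the names `LOG`, `prefix`, `views`, `go_back`, and `is_presheaf` are all hypothetical, and the set assigned to each Time object is assumed to be the collection of log prefixes observable at or before that instant. A Time arrow from an earlier to a later instant is mapped to a function that goes the other way by truncating a log view.

```python
from itertools import combinations_with_replacement

# Hypothetical log: (time instant, message) pairs, ordered by time.
LOG = ((1, "service started"), (2, "config loaded"),
       (3, "allocation failed"), (4, "unhandled exception"))
TIMES = tuple(t for t, _ in LOG)


def prefix(t):
    """The log as it looked at time t (a tuple of (time, message) pairs)."""
    return tuple(entry for entry in LOG if entry[0] <= t)


def views(t):
    """Object part: the set assigned to Time object t, assumed here to be
    all log prefixes observable at or before t."""
    return {prefix(s) for s in TIMES if s <= t}


def go_back(t_early, t_late):
    """Arrow part: the Time arrow t_early -> t_late becomes a function
    views(t_late) -> views(t_early), so it points backwards."""
    if t_early > t_late:
        raise ValueError("Time arrows only run forward")
    return lambda view: tuple(e for e in view if e[0] <= t_early)


def is_presheaf():
    """Check the functor laws on every chain a <= b <= c of Time objects."""
    for a, b in combinations_with_replacement(TIMES, 2):
        # The reversed arrow must land in the set of the earlier instant.
        if any(go_back(a, b)(v) not in views(a) for v in views(b)):
            return False
        for c in (t for t in TIMES if t >= b):
            for v in views(c):
                # Composition is preserved, in reversed order.
                if go_back(a, c)(v) != go_back(a, b)(go_back(b, c)(v)):
                    return False
    # Identity arrows must map to identity functions.
    return all(go_back(t, t)(v) == v for t in TIMES for v in views(t))
```

Calling `is_presheaf()` checks the identity and composition laws for this toy model; `go_back(2, 4)(prefix(4))` corresponds to going back in the log from the last message to the second one.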

Presheaves can be mapped to each other, for example, from a presheaf of logs to a presheaf of associated source code fragments or stack traces, and this is called a natural transformation in category theory. It also fits with natural debugging when we go back in logs and, at the same time, browse source code or some other associated information sets.
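A natural transformation between two such presheaves can be sketched in the same spirit. The example below is a hedged illustration only: the log, the `SOURCE` mapping from messages to source locations, and all function names are hypothetical, and the presheaf of source fragments is assumed to consist of time-indexed source locations that are truncated in the same way as log views. Naturality then says that going back in the log and looking up source code gives the same result as looking up source code and then going back.

```python
# Hypothetical data: (time, message) log entries and a message-to-source map.
LOG = ((1, "service started"), (2, "config loaded"), (3, "allocation failed"))
SOURCE = {
    "service started": "main.c:10",
    "config loaded": "config.c:42",
    "allocation failed": "pool.c:7",
}
TIMES = tuple(t for t, _ in LOG)


def truncate(t_early):
    """Reversed arrow used by both presheaves: keep entries up to t_early."""
    return lambda view: tuple(e for e in view if e[0] <= t_early)


def log_views(t):
    """Presheaf of logs: log prefixes observable at or before t."""
    return {tuple(e for e in LOG if e[0] <= s) for s in TIMES if s <= t}


def to_source(view):
    """Component of the transformation: replace each message with its
    associated source location, keeping the time index."""
    return tuple((t, SOURCE[msg]) for t, msg in view)


def is_natural():
    """Check that both paths around every naturality square agree."""
    return all(
        to_source(truncate(a)(v)) == truncate(a)(to_source(v))
        for b in TIMES for a in TIMES if a <= b
        for v in log_views(b)
    )
```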

This article is also available in PDF format.

Software Construction Brick by Brick

In the past, we used LEGO® bricks to represent some simple data structures and software logs and, more recently, more complex data structures and algorithms. All that has been transformed into a series of manageable short books (increments) to facilitate earlier adoption and feedback. Software diagnostics is an integral part of software construction and software post-construction problem-solving, and we aim to provide real hands-on training from general concepts and architecture to low-level details.

Increment 1 (ISBN-13: 978-1912636709) is currently available on Leanpub and Amazon Kindle Store. It covers memory, memory addresses, pointers, program loading, kernel and user spaces, virtual process space, memory isolation, virtual and physical memory, memory paging, and memory dump types.

Machine Learning Brick by Brick

In the past, we used LEGO® bricks to represent some simple data structures and software logs. All that has been transformed into a series of manageable short books (epochs) to facilitate earlier adoption and feedback. Machine learning is now an integral part of pattern-oriented software diagnostics, and we aim to provide real hands-on training from general concepts and architecture to low-level details and mathematics.

Epoch 1 (ISBN-13: 978-1912636501) is currently available on Leanpub and Amazon Kindle Store. It covers the simplest linear associative network, proposes a brick notation for algebraic expressions, shows required calculus derivations, and illustrates gradient descent.

Memory Dump Analysis Anthology, Volume 1, Revised Edition

The new Revised Edition is available!

The following direct links can be used to order the English edition now:

Buy PDF and EPUB from Leanpub

Buy Kindle Print Replica from Amazon

Also available in PDF and EPUB formats from Software Diagnostics Services.

This reference volume consists of revised, edited, cross-referenced, and thematically organized articles from Software Diagnostics Institute and Software Diagnostics Library (former Crash Dump Analysis blog) written in August 2006 - December 2007. This major revision updates tool information and links with ones relevant for Windows 10 and removes obsolete references. Some articles are preserved for historical reasons, and some are updated to reflect the debugger engine changes. The output of WinDbg commands is also remastered to include color highlighting. Most of the content, especially memory analysis pattern language, is still relevant today and for the foreseeable future. Crash dump analysis pattern names are also corrected to reflect the continued expansion of the catalog.

The primary audience for Memory Dump Analysis Anthology reference volumes is software engineers developing and maintaining products on Windows platforms; technical support, escalation, and site reliability engineers dealing with complex software issues; quality assurance engineers testing software on Windows platforms; security and vulnerability researchers; reverse engineers; and malware and memory forensics analysts.

- Title: Memory Dump Analysis Anthology, Volume 1, Revised Edition

- Authors: Dmitry Vostokov, Software Diagnostics Institute

- Publisher: OpenTask (April 2020)

- Language: English

- Product Dimensions: 22.86 x 15.24 cm

- PDF: 713 pages

- ISBN-13: 978-1912636211

The original Korean edition is also available:

The following direct links can be used to order the Korean edition now:

Acorn (The Korean translation publisher) or Kyobo book or Yes24.com

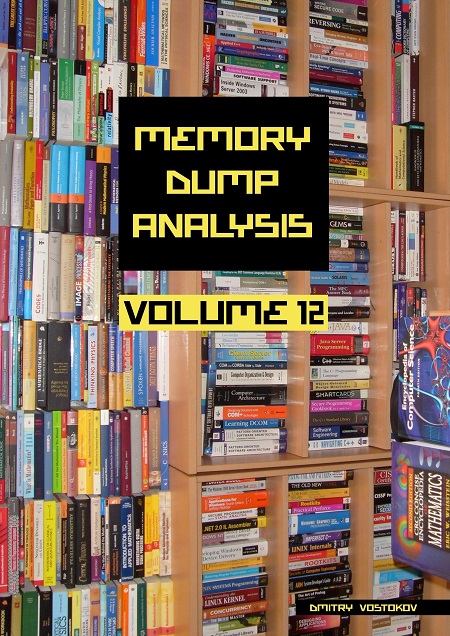

Memory Dump Analysis Anthology, Volume 12

The following direct links can be used to order the book:

Buy Kindle print replica edition from Amazon

Also available in PDF format from Software Diagnostics Services

This reference volume consists of revised, edited, cross-referenced, and thematically organized selected articles from Software Diagnostics Institute (DumpAnalysis.org + TraceAnalysis.org) and Software Diagnostics Library (former Crash Dump Analysis blog, DumpAnalysis.org/blog) about software diagnostics, root cause analysis, debugging, crash and hang dump analysis, and software trace and log analysis, written in December 2018 - November 2019. It is intended for software engineers developing and maintaining products on Windows and Linux platforms; quality assurance engineers testing software; technical support, escalation, and site reliability engineers dealing with complex software issues; security researchers; reverse engineers; and malware and memory forensics analysts. This volume is fully cross-referenced with volumes 1 – 11 and features:

- 6 new crash dump analysis patterns with selected downloadable example memory dumps

- 2 pattern interaction case studies including Python crash dump analysis

- 16 new software trace and log analysis patterns

- Introduction to software pathology

- Introduction to graphical representation of software traces and logs

- Introduction to space-like narratology as an application of trace and log analysis patterns to image analysis

- Introduction to analysis pattern duality

- Introduction to machine learning square and its relationship with the state of the art of pattern-oriented diagnostics

- Historical reminiscences on 10 years of trace and log analysis patterns and software narratology

- Introduction to baseplate representation of chemical structures

- WinDbg notes

- Using C++ as a scripting tool

- List of recommended Linux kernel space books

- Volume index of memory dump analysis patterns

- Volume index of trace and log analysis patterns

Product information:

- Title: Memory Dump Analysis Anthology, Volume 12

- Authors: Dmitry Vostokov, Software Diagnostics Institute

- Language: English

- Product Dimensions: 22.86 x 15.24 cm

- Paperback: 179 pages

- Publisher: OpenTask (December 2019)

- ISBN-13: 978-1-912636-12-9

Writing Bad Code: Software Defect Construction, Simulation and Modeling of Software Bugs

This book is planned for early 2020 (ISBN: 978-1906717759).

Python Crash Dump Analysis Case Study

When working on the Region Profile memory analysis pattern, we decided to combine two separate Pandas profiling scripts into one:

import pandas as pd

import pandas_profiling

df = pd.read_csv("stack.csv")

html_file = open("stack.html", "w")

html_file.write(pandas_profiling.ProfileReport(df).to_html())

html_file.close()

df = pd.read_csv("stack4columns.csv")

html_file = open("stack4columns.html", "w")

html_file.write(pandas_profiling.ProfileReport(df).to_html())

html_file.close()

Unfortunately, python.exe crashed. Since we always configure LocalDumps to catch interesting crashes, we got python.exe.2140.dmp. We promptly loaded it into Microsoft WinDbg Debugger (or WinDbg Preview, see quick download links) and saw Self-Diagnosis from Top Module in Exception Stack Trace (if we ignore exception processing function calls):

0:020> k

# Child-SP RetAddr Call Site

00 00000064`007bbb08 00007ffd`3cac7ff7 ntdll!NtWaitForMultipleObjects+0x14

01 00000064`007bbb10 00007ffd`3cac7ede KERNELBASE!WaitForMultipleObjectsEx+0x107

02 00000064`007bbe10 00007ffd`3f6871fb KERNELBASE!WaitForMultipleObjects+0xe

03 00000064`007bbe50 00007ffd`3f686ca8 kernel32!WerpReportFaultInternal+0x51b

04 00000064`007bbf70 00007ffd`3cb6f848 kernel32!WerpReportFault+0xac

05 00000064`007bbfb0 00007ffd`3f7c4af2 KERNELBASE!UnhandledExceptionFilter+0x3b8

06 00000064`007bc0d0 00007ffd`3f7ac6e6 ntdll!RtlUserThreadStart$filt$0+0xa2

07 00000064`007bc110 00007ffd`3f7c120f ntdll!_C_specific_handler+0x96

08 00000064`007bc180 00007ffd`3f78a299 ntdll!RtlpExecuteHandlerForException+0xf

09 00000064`007bc1b0 00007ffd`3f7bfe7e ntdll!RtlDispatchException+0x219

0a 00000064`007bc8c0 00007ffd`01f5735d ntdll!KiUserExceptionDispatch+0x2e

0b 00000064`007bd070 00007ffd`01f57392 tcl86t!Tcl_PanicVA+0x13d

0c 00000064`007bd0f0 00007ffd`01e83884 tcl86t!Tcl_Panic+0x22

0d 00000064`007bd120 00007ffd`01e86393 tcl86t!Tcl_AsyncDelete+0x114

0e 00000064`007bd150 00007ffd`234c414c tcl86t!Tcl_DeleteInterp+0xf3

0f 00000064`007bd1a0 00007ffc`ef4f728d _tkinter!PyInit__tkinter+0x14bc

10 00000064`007bd1d0 00007ffc`ef4f5706 python37!PyObject_Hash+0x349

11 00000064`007bd210 00007ffc`ef4f71ed python37!PyDict_GetItem+0x4a6

12 00000064`007bd260 00007ffc`ef53c803 python37!PyObject_Hash+0x2a9

13 00000064`007bd2a0 00007ffc`ef4e0673 python37!PyErr_NoMemory+0xe49f

14 00000064`007bd2d0 00007ffc`ef514ee4 python37!PyCFunction_NewEx+0x137

15 00000064`007bd300 00007ffc`ef514b20 python37!PyMethod_ClearFreeList+0x568

16 00000064`007bd3a0 00007ffc`ef514add python37!PyMethod_ClearFreeList+0x1a4

17 00000064`007bd3d0 00007ffc`ef538500 python37!PyMethod_ClearFreeList+0x161

18 00000064`007bd400 00007ffc`ef4da197 python37!PyErr_NoMemory+0xa19c

19 00000064`007bd430 00007ffc`ef50e77d python37!PyObject_GetIter+0x1f

1a 00000064`007bd460 00007ffc`ef50b146 python37!PyEval_EvalFrameDefault+0xa4d

1b 00000064`007bd5a0 00007ffc`ef50dbcc python37!PyEval_EvalCodeWithName+0x1a6

1c 00000064`007bd640 00007ffc`ef50e1df python37!PyMethodDef_RawFastCallKeywords+0xccc

1d 00000064`007bd700 00007ffc`ef50b146 python37!PyEval_EvalFrameDefault+0x4af

1e 00000064`007bd840 00007ffc`ef4d358b python37!PyEval_EvalCodeWithName+0x1a6

*** WARNING: Unable to verify checksum for lib.cp37-win_amd64.pyd

1f 00000064`007bd8e0 00007ffd`02bb9163 python37!PyEval_EvalCodeEx+0x9b

20 00000064`007bd970 00007ffd`02bb8c82 lib_cp37_win_amd64+0x9163

21 00000064`007bd9f0 00007ffd`02bda552 lib_cp37_win_amd64+0x8c82

22 00000064`007bda20 00007ffd`02bdaeed lib_cp37_win_amd64!PyInit_lib+0x1c542

23 00000064`007bdb50 00007ffc`ef50d255 lib_cp37_win_amd64!PyInit_lib+0x1cedd

24 00000064`007bdbe0 00007ffc`ef50db17 python37!PyMethodDef_RawFastCallKeywords+0x355

25 00000064`007bdc60 00007ffc`ef50ed2a python37!PyMethodDef_RawFastCallKeywords+0xc17

26 00000064`007bdd20 00007ffc`ef50b146 python37!PyEval_EvalFrameDefault+0xffa

27 00000064`007bde60 00007ffc`ef50dbcc python37!PyEval_EvalCodeWithName+0x1a6

28 00000064`007bdf00 00007ffc`ef50e5e2 python37!PyMethodDef_RawFastCallKeywords+0xccc

29 00000064`007bdfc0 00007ffc`ef50dab3 python37!PyEval_EvalFrameDefault+0x8b2

2a 00000064`007be100 00007ffc`ef50e1df python37!PyMethodDef_RawFastCallKeywords+0xbb3

2b 00000064`007be1c0 00007ffc`ef50dab3 python37!PyEval_EvalFrameDefault+0x4af

2c 00000064`007be300 00007ffc`ef50e5e2 python37!PyMethodDef_RawFastCallKeywords+0xbb3

2d 00000064`007be3c0 00007ffc`ef50dab3 python37!PyEval_EvalFrameDefault+0x8b2

2e 00000064`007be500 00007ffc`ef50e1df python37!PyMethodDef_RawFastCallKeywords+0xbb3

2f 00000064`007be5c0 00007ffc`ef50a3bd python37!PyEval_EvalFrameDefault+0x4af

30 00000064`007be700 00007ffc`ef4dc53b python37!PyFunction_FastCallDict+0xdd

31 00000064`007be7d0 00007ffc`ef5be47b python37!PyObject_Call+0xd3

32 00000064`007be800 00007ffc`ef5029cd python37!Py_hashtable_size+0x3e63

33 00000064`007be830 00007ffc`ef520b7b python37!PyList_Extend+0x1f1

34 00000064`007be870 00007ffc`ef520b3f python37!PyBuiltin_Init+0x587

35 00000064`007be8a0 00007ffc`ef4f78a7 python37!PyBuiltin_Init+0x54b

36 00000064`007be8e0 00007ffc`ef4f763a python37!PyObject_FastCallKeywords+0x3e7

37 00000064`007be910 00007ffc`ef50dbfe python37!PyObject_FastCallKeywords+0x17a

38 00000064`007be970 00007ffc`ef50e1df python37!PyMethodDef_RawFastCallKeywords+0xcfe

39 00000064`007bea30 00007ffc`ef50a3bd python37!PyEval_EvalFrameDefault+0x4af

3a 00000064`007beb70 00007ffc`ef4de47d python37!PyFunction_FastCallDict+0xdd

3b 00000064`007bec40 00007ffc`ef50eea5 python37!PyObject_IsAbstract+0x1b1

3c 00000064`007bec80 00007ffc`ef50b146 python37!PyEval_EvalFrameDefault+0x1175

3d 00000064`007bedc0 00007ffc`ef50a49a python37!PyEval_EvalCodeWithName+0x1a6

3e 00000064`007bee60 00007ffc`ef4de47d python37!PyFunction_FastCallDict+0x1ba

3f 00000064`007bef30 00007ffc`ef50eea5 python37!PyObject_IsAbstract+0x1b1

40 00000064`007bef70 00007ffc`ef50dab3 python37!PyEval_EvalFrameDefault+0x1175

41 00000064`007bf0b0 00007ffc`ef50e133 python37!PyMethodDef_RawFastCallKeywords+0xbb3

42 00000064`007bf170 00007ffc`ef50dab3 python37!PyEval_EvalFrameDefault+0x403

43 00000064`007bf2b0 00007ffc`ef50e133 python37!PyMethodDef_RawFastCallKeywords+0xbb3

44 00000064`007bf370 00007ffc`ef50a3bd python37!PyEval_EvalFrameDefault+0x403

45 00000064`007bf4b0 00007ffc`ef50a1de python37!PyFunction_FastCallDict+0xdd

46 00000064`007bf580 00007ffc`ef4de3f4 python37!PyMember_GetOne+0x732

47 00000064`007bf610 00007ffc`ef50eea5 python37!PyObject_IsAbstract+0x128

48 00000064`007bf650 00007ffc`ef50a3bd python37!PyEval_EvalFrameDefault+0x1175

49 00000064`007bf790 00007ffc`ef51e834 python37!PyFunction_FastCallDict+0xdd

4a 00000064`007bf860 00007ffc`ef51e7a1 python37!PyObject_Call_Prepend+0x6c

4b 00000064`007bf8f0 00007ffc`ef4dc4dd python37!PyDict_Contains+0x6d5

4c 00000064`007bf920 00007ffc`ef5d7a4e python37!PyObject_Call+0x75

4d 00000064`007bf950 00007ffc`ef67894a python37!PySignal_AfterFork+0x157a

4e 00000064`007bf980 00007ffd`3cd6d9f2 python37!PyThread_tss_is_created+0xde

4f 00000064`007bf9b0 00007ffd`3f637bd4 ucrtbase!thread_start<unsigned int (__cdecl*)(void *),1>+0x42

50 00000064`007bf9e0 00007ffd`3f78cee1 kernel32!BaseThreadInitThunk+0x14

51 00000064`007bfa10 00000000`00000000 ntdll!RtlUserThreadStart+0x21
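Ignoring the exception processing function calls to find the Top Module can be sketched as a small script over saved `k` output. This is a minimal illustration, not part of the original analysis: the function names, the regular expression, and the choice of `ntdll!KiUserExceptionDispatch` as the marker frame are assumptions based only on the trace shown above, and other traces may need a different marker.

```python
import re

# Frame number, Child-SP, RetAddr, Call Site (as printed by the k command).
FRAME = re.compile(r"^([0-9a-f]{2,})\s+([0-9a-f`]+)\s+([0-9a-f`]+)\s+(.+)$")

# A few frames copied from the stack trace above.
SAMPLE = """\
09 00000064`007bc1b0 00007ffd`3f7bfe7e ntdll!RtlDispatchException+0x219
0a 00000064`007bc8c0 00007ffd`01f5735d ntdll!KiUserExceptionDispatch+0x2e
0b 00000064`007bd070 00007ffd`01f57392 tcl86t!Tcl_PanicVA+0x13d
0c 00000064`007bd0f0 00007ffd`01e83884 tcl86t!Tcl_Panic+0x22
"""


def parse_frames(text):
    """Return (frame number, module, call site) for each frame line;
    header and warning lines are skipped because they do not match."""
    frames = []
    for line in text.splitlines():
        match = FRAME.match(line.strip())
        if not match:
            continue
        call_site = match.group(4)
        module = re.split(r"[!+]", call_site, maxsplit=1)[0]
        frames.append((int(match.group(1), 16), module, call_site))
    return frames


def frames_below_dispatch(frames, marker="ntdll!KiUserExceptionDispatch"):
    """Drop the exception processing frames, up to and including the
    assumed marker frame; return all frames if the marker is absent."""
    for index, (_, _, call_site) in enumerate(frames):
        if call_site.startswith(marker):
            return frames[index + 1:]
    return frames


def top_module(text):
    """Module of the first frame below exception processing, if any."""
    remaining = frames_below_dispatch(parse_frames(text))
    return remaining[0][1] if remaining else None
```

For the sample frames, `top_module(SAMPLE)` yields `tcl86t`, the module whose `Tcl_Panic` call is the Self-Diagnosis seen in the trace.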

We noticed PyErr_NoMemory function calls, but the offset was so big (0xe49f) that we considered it Incorrect Symbolic Information. Indeed, there were only export symbols available:

0:020> lmv m python37

Browse full module list

start end module name

00007ffc`ef4d0000 00007ffc`ef88f000 python37 (export symbols) python37.dll

Loaded symbol image file: python37.dll

Image path: C:\Program Files (x86)\Microsoft Visual Studio\Shared\Python37_64\python37.dll

Image name: python37.dll

Browse all global symbols functions data

Timestamp: Mon Mar 25 22:22:41 2019 (5C9954B1)

CheckSum: 00396A11

ImageSize: 003BF000

File version: 3.7.3150.1013

Product version: 3.7.3150.1013

File flags: 0 (Mask 3F)

File OS: 4 Unknown Win32

File type: 2.0 Dll

File date: 00000000.00000000

Translations: 0000.04b0

Information from resource tables:

CompanyName: Python Software Foundation

ProductName: Python

InternalName: Python DLL

OriginalFilename: python37.dll

ProductVersion: 3.7.3

FileVersion: 3.7.3

FileDescription: Python Core

LegalCopyright: Copyright © 2001-2016 Python Software Foundation. Copyright © 2000 BeOpen.com. Copyright © 1995-2001 CNRI. Copyright © 1991-1995 SMC.
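The "offset too big" check described above can be sketched as a small script over saved stack trace text. This is only an illustration: the `suspicious_frames` helper and the `SUSPICIOUS_OFFSET` cut-off are hypothetical names and values that we chose for the example, not part of WinDbg.

```python
import re

# Matches the call-site part of a stack trace line,
# for example "python37!PyErr_NoMemory+0xe49f".
CALL_SITE = re.compile(
    r"(?P<module>[\w.]+)!(?P<symbol>.+?)\+0x(?P<offset>[0-9a-fA-F]+)\s*$"
)

# Hypothetical cut-off: larger offsets hint that only export symbols were used.
SUSPICIOUS_OFFSET = 0x1000


def suspicious_frames(stack_lines, threshold=SUSPICIOUS_OFFSET):
    """Return (module, symbol, offset) for frames whose offset exceeds the threshold."""
    found = []
    for line in stack_lines:
        match = CALL_SITE.search(line)
        if match is None:
            continue  # no module!symbol+offset on this line
        offset = int(match.group("offset"), 16)
        if offset > threshold:
            found.append((match.group("module"), match.group("symbol"), offset))
    return found
```

A flagged frame is only a hint to check the symbol type with lmv, as we did here; large functions can legitimately have large offsets.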

Fortunately, this Python installation came with PDB files, so we provided a path to them:

0:020> .sympath+ C:\Program Files (x86)\Microsoft Visual Studio\Shared\Python37_64

Symbol search path is: srv*;C:\Program Files (x86)\Microsoft Visual Studio\Shared\Python37_64

Expanded Symbol search path is: cache*;SRV*https://msdl.microsoft.com/download/symbols;

c:\program files (x86)\microsoft visual studio\shared\python37_64

************* Path validation summary **************

Response Time (ms) Location

Deferred srv*

OK C:\Program Files (x86)\Microsoft Visual Studio\Shared\Python37_64

Then we got a better stack trace:

0:020> .ecxr

rax=0000000000000000 rbx=00007ffd01fd0e60 rcx=0000000000002402

rdx=00007ffd3ce3b770 rsi=00007ffd02000200 rdi=000001b38a51d9c0

rip=00007ffd01f5735d rsp=00000064007bd070 rbp=0000000000000002

r8=00000064007bc828 r9=00000064007bc920 r10=0000000000000000

r11=00000064007bcf70 r12=000001b38a51d9c0 r13=000001b38a51d8c0

r14=000001b38a51d9c0 r15=00007ffd01e86393

iopl=0 nv up ei pl nz na pe nc

cs=0033 ss=002b ds=002b es=002b fs=0053 gs=002b efl=00000202

tcl86t!Tcl_PanicVA+0x13d:

00007ffd`01f5735d cc int 3

0:020> kL

*** Stack trace for last set context - .thread/.cxr resets it

# Child-SP RetAddr Call Site

00 00000064`007bd070 00007ffd`01f57392 tcl86t!Tcl_PanicVA+0x13d

01 00000064`007bd0f0 00007ffd`01e83884 tcl86t!Tcl_Panic+0x22

02 00000064`007bd120 00007ffd`01e86393 tcl86t!Tcl_AsyncDelete+0x114

03 00000064`007bd150 00007ffd`234c414c tcl86t!Tcl_DeleteInterp+0xf3

04 00000064`007bd1a0 00007ffc`ef4f728d _tkinter!PyInit__tkinter+0x14bc

05 00000064`007bd1d0 00007ffc`ef4f5706 python37!dict_dealloc+0x22d

06 00000064`007bd210 00007ffc`ef4f71ed python37!subtype_dealloc+0x176

07 (Inline Function) --------`-------- python37!free_keys_object+0xf5

08 00000064`007bd260 00007ffc`ef53c803 python37!dict_dealloc+0x18d

09 00000064`007bd2a0 00007ffc`ef4e0673 python37!subtype_clear+0x5b967

0a 00000064`007bd2d0 00007ffc`ef514ee4 python37!delete_garbage+0x4b

0b 00000064`007bd300 00007ffc`ef514b20 python37!collect+0x184

0c 00000064`007bd3a0 00007ffc`ef514add python37!collect_with_callback+0x34

0d 00000064`007bd3d0 00007ffc`ef538500 python37!collect_generations+0x4d

0e (Inline Function) --------`-------- python37!_PyObject_GC_Alloc+0x5e2ec

0f (Inline Function) --------`-------- python37!_PyObject_GC_Malloc+0x5e2ec

10 (Inline Function) --------`-------- python37!_PyObject_GC_New+0x5e2f3

11 00000064`007bd400 00007ffc`ef4da197 python37!tuple_iter+0x5e310

12 00000064`007bd430 00007ffc`ef50e77d python37!PyObject_GetIter+0x1f

13 00000064`007bd460 00007ffc`ef50b146 python37!_PyEval_EvalFrameDefault+0xa4d

14 (Inline Function) --------`-------- python37!PyEval_EvalFrameEx+0x17

15 00000064`007bd5a0 00007ffc`ef50dbcc python37!_PyEval_EvalCodeWithName+0x1a6

16 (Inline Function) --------`-------- python37!_PyFunction_FastCallKeywords+0x1ca

17 00000064`007bd640 00007ffc`ef50e1df python37!call_function+0x3ac

18 00000064`007bd700 00007ffc`ef50b146 python37!_PyEval_EvalFrameDefault+0x4af

19 (Inline Function) --------`-------- python37!PyEval_EvalFrameEx+0x17

1a 00000064`007bd840 00007ffc`ef4d358b python37!_PyEval_EvalCodeWithName+0x1a6

1b 00000064`007bd8e0 00007ffd`02bb9163 python37!PyEval_EvalCodeEx+0x9b

1c 00000064`007bd970 00007ffd`02bb8c82 lib_cp37_win_amd64+0x9163

1d 00000064`007bd9f0 00007ffd`02bda552 lib_cp37_win_amd64+0x8c82

1e 00000064`007bda20 00007ffd`02bdaeed lib_cp37_win_amd64!PyInit_lib+0x1c542

1f 00000064`007bdb50 00007ffc`ef50d255 lib_cp37_win_amd64!PyInit_lib+0x1cedd

20 00000064`007bdbe0 00007ffc`ef50db17 python37!_PyMethodDef_RawFastCallKeywords+0x355

21 (Inline Function) --------`-------- python37!_PyCFunction_FastCallKeywords+0x22

22 00000064`007bdc60 00007ffc`ef50ed2a python37!call_function+0x2f7

23 00000064`007bdd20 00007ffc`ef50b146 python37!_PyEval_EvalFrameDefault+0xffa

24 (Inline Function) --------`-------- python37!PyEval_EvalFrameEx+0x17

25 00000064`007bde60 00007ffc`ef50dbcc python37!_PyEval_EvalCodeWithName+0x1a6

26 (Inline Function) --------`-------- python37!_PyFunction_FastCallKeywords+0x1ca

27 00000064`007bdf00 00007ffc`ef50e5e2 python37!call_function+0x3ac

28 00000064`007bdfc0 00007ffc`ef50dab3 python37!_PyEval_EvalFrameDefault+0x8b2

29 (Inline Function) --------`-------- python37!PyEval_EvalFrameEx+0x17

2a (Inline Function) --------`-------- python37!function_code_fastcall+0x5e

2b (Inline Function) --------`-------- python37!_PyFunction_FastCallKeywords+0xb1

2c 00000064`007be100 00007ffc`ef50e1df python37!call_function+0x293

2d 00000064`007be1c0 00007ffc`ef50dab3 python37!_PyEval_EvalFrameDefault+0x4af

2e (Inline Function) --------`-------- python37!PyEval_EvalFrameEx+0x17

2f (Inline Function) --------`-------- python37!function_code_fastcall+0x5e

30 (Inline Function) --------`-------- python37!_PyFunction_FastCallKeywords+0xb1

31 00000064`007be300 00007ffc`ef50e5e2 python37!call_function+0x293

32 00000064`007be3c0 00007ffc`ef50dab3 python37!_PyEval_EvalFrameDefault+0x8b2

33 (Inline Function) --------`-------- python37!PyEval_EvalFrameEx+0x17

34 (Inline Function) --------`-------- python37!function_code_fastcall+0x5e

35 (Inline Function) --------`-------- python37!_PyFunction_FastCallKeywords+0xb1

36 00000064`007be500 00007ffc`ef50e1df python37!call_function+0x293

37 00000064`007be5c0 00007ffc`ef50a3bd python37!_PyEval_EvalFrameDefault+0x4af

38 (Inline Function) --------`-------- python37!PyEval_EvalFrameEx+0x17

39 (Inline Function) --------`-------- python37!function_code_fastcall+0x5c

3a 00000064`007be700 00007ffc`ef4dc53b python37!_PyFunction_FastCallDict+0xdd

3b 00000064`007be7d0 00007ffc`ef5be47b python37!PyObject_Call+0xd3

3c 00000064`007be800 00007ffc`ef5029cd python37!starmap_next+0x67

3d 00000064`007be830 00007ffc`ef520b7b python37!list_extend+0x1e9

3e 00000064`007be870 00007ffc`ef520b3f python37!list___init___impl+0x27

3f 00000064`007be8a0 00007ffc`ef4f78a7 python37!list___init__+0x67

40 00000064`007be8e0 00007ffc`ef4f763a python37!type_call+0xa7

41 00000064`007be910 00007ffc`ef50dbfe python37!_PyObject_FastCallKeywords+0x17a

42 00000064`007be970 00007ffc`ef50e1df python37!call_function+0x3de

43 00000064`007bea30 00007ffc`ef50a3bd python37!_PyEval_EvalFrameDefault+0x4af

44 (Inline Function) --------`-------- python37!PyEval_EvalFrameEx+0x17

45 (Inline Function) --------`-------- python37!function_code_fastcall+0x5c

46 00000064`007beb70 00007ffc`ef4de47d python37!_PyFunction_FastCallDict+0xdd

47 (Inline Function) --------`-------- python37!PyObject_Call+0xcf

48 00000064`007bec40 00007ffc`ef50eea5 python37!do_call_core+0x14d

49 00000064`007bec80 00007ffc`ef50b146 python37!_PyEval_EvalFrameDefault+0x1175

4a (Inline Function) --------`-------- python37!PyEval_EvalFrameEx+0x17

4b 00000064`007bedc0 00007ffc`ef50a49a python37!_PyEval_EvalCodeWithName+0x1a6

4c 00000064`007bee60 00007ffc`ef4de47d python37!_PyFunction_FastCallDict+0x1ba

4d (Inline Function) --------`-------- python37!PyObject_Call+0xcf

4e 00000064`007bef30 00007ffc`ef50eea5 python37!do_call_core+0x14d

4f 00000064`007bef70 00007ffc`ef50dab3 python37!_PyEval_EvalFrameDefault+0x1175

50 (Inline Function) --------`-------- python37!PyEval_EvalFrameEx+0x17

51 (Inline Function) --------`-------- python37!function_code_fastcall+0x5e

52 (Inline Function) --------`-------- python37!_PyFunction_FastCallKeywords+0xb1

53 00000064`007bf0b0 00007ffc`ef50e133 python37!call_function+0x293

54 00000064`007bf170 00007ffc`ef50dab3 python37!_PyEval_EvalFrameDefault+0x403

55 (Inline Function) --------`-------- python37!PyEval_EvalFrameEx+0x17

56 (Inline Function) --------`-------- python37!function_code_fastcall+0x5e

57 (Inline Function) --------`-------- python37!_PyFunction_FastCallKeywords+0xb1

58 00000064`007bf2b0 00007ffc`ef50e133 python37!call_function+0x293

59 00000064`007bf370 00007ffc`ef50a3bd python37!_PyEval_EvalFrameDefault+0x403

5a (Inline Function) --------`-------- python37!PyEval_EvalFrameEx+0x17

5b (Inline Function) --------`-------- python37!function_code_fastcall+0x5c

5c 00000064`007bf4b0 00007ffc`ef50a1de python37!_PyFunction_FastCallDict+0xdd

5d (Inline Function) --------`-------- python37!_PyObject_FastCallDict+0x25

5e (Inline Function) --------`-------- python37!_PyObject_Call_Prepend+0x5a

5f 00000064`007bf580 00007ffc`ef4de3f4 python37!method_call+0x92

60 (Inline Function) --------`-------- python37!PyObject_Call+0x46

61 00000064`007bf610 00007ffc`ef50eea5 python37!do_call_core+0xc4

62 00000064`007bf650 00007ffc`ef50a3bd python37!_PyEval_EvalFrameDefault+0x1175

63 (Inline Function) --------`-------- python37!PyEval_EvalFrameEx+0x17

64 (Inline Function) --------`-------- python37!function_code_fastcall+0x5c

65 00000064`007bf790 00007ffc`ef51e834 python37!_PyFunction_FastCallDict+0xdd

66 00000064`007bf860 00007ffc`ef51e7a1 python37!_PyObject_Call_Prepend+0x6c

67 00000064`007bf8f0 00007ffc`ef4dc4dd python37!slot_tp_call+0x51

68 00000064`007bf920 00007ffc`ef5d7a4e python37!PyObject_Call+0x75

69 00000064`007bf950 00007ffc`ef67894a python37!t_bootstrap+0x6a

6a 00000064`007bf980 00007ffd`3cd6d9f2 python37!bootstrap+0x32

6b 00000064`007bf9b0 00007ffd`3f637bd4 ucrtbase!thread_start<unsigned int (__cdecl*)(void *),1>+0x42

6c 00000064`007bf9e0 00007ffd`3f78cee1 kernel32!BaseThreadInitThunk+0x14

6d 00000064`007bfa10 00000000`00000000 ntdll!RtlUserThreadStart+0x21

We noticed that something wasn’t quite right during Python garbage collection processing, so we disabled GC in our script and the problem was gone for the duration of its execution:

import gc

gc.disable()

Of course, we consider this a temporary workaround and should add it to our Workaround Patterns catalog.
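If disabling GC for the whole script is too broad, the same workaround can be limited to the code region that triggers the problem. The following is a minimal sketch; the `gc_paused` name is our own and hypothetical, while `gc.isenabled`, `gc.disable`, and `gc.enable` are standard library functions.

```python
import gc
from contextlib import contextmanager


@contextmanager
def gc_paused():
    """Disable the cyclic garbage collector for the duration of a block."""
    was_enabled = gc.isenabled()
    gc.disable()
    try:
        yield
    finally:
        # Restore the previous state even if the block raises.
        if was_enabled:
            gc.enable()
```

It would be used as `with gc_paused(): ...` around the affected calls. Reference counting still frees objects inside the block; only the collection of reference cycles is postponed.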

Pattern-Oriented Anomaly Detection and Analysis

We are preparing an outline of our consistent approach across domains that will be published as a booklet soon (ISBN: 978-1912636068).

Machine Learning Square and Software Diagnostics Institute Roadmap

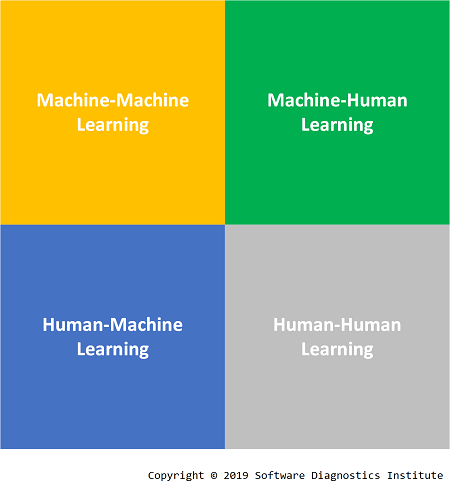

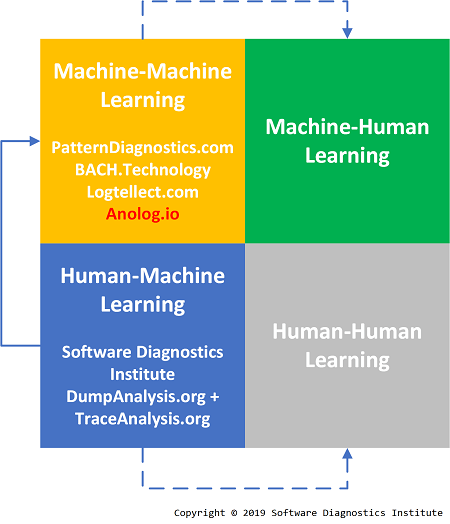

When researching ML from the point of view of sociology and humanities*, we came upon the idea of a Machine Learning Machine Learning … sequence. Then we realised that there is a distinction between Machine-Machine Learning (Machine Learning of Machine structure and behaviour) and Machine-Human Learning (Machine Learning of Human state and behaviour, ML approaches to medical diagnostics). This naturally extends to a learning square where we add Human-Machine Learning (Human Learning of machine diagnostics) and Human-Human Learning (Human Learning of Human state and behaviour, medicine, humanities, and sociology):

Before 2018, we were mainly devising a set of Human-Machine Learning pattern languages. Recently we moved towards ML approaches, and this activity occupies the Machine-Machine Learning quadrant. Since analysis patterns developed for Human-Machine Learning are sufficiently rich to be used in domains other than software, the results can be applied to Human-Human Learning (for example, narratology) and, together with additional results from Machine-Machine Learning, to Machine-Human Learning (for example, space-like narratology for image analysis):

* Machine Learners: Archaeology of a Data Practice, by Adrian Mackenzie (ISBN: 978-0262036825)

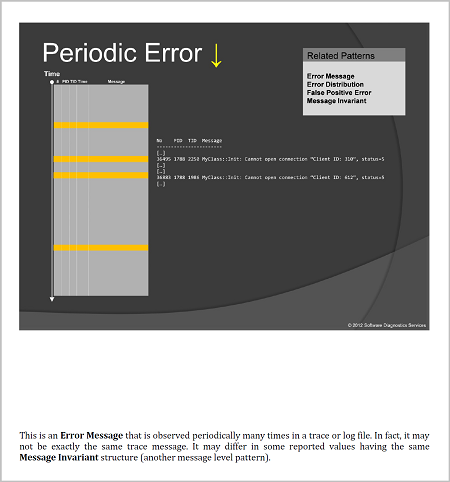

Application of Trace and Log Analysis Patterns to Image Analysis: Introducing Space-like Narratology

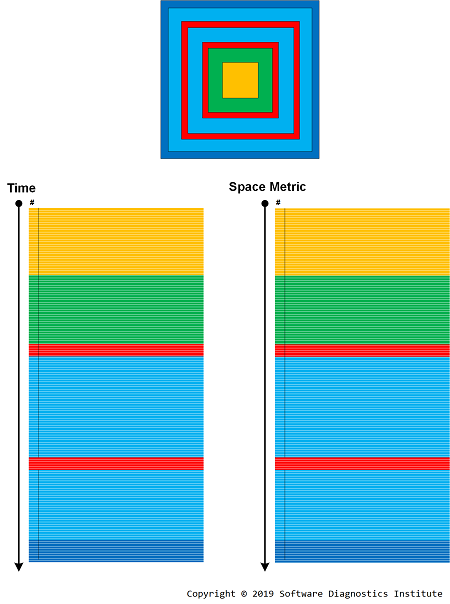

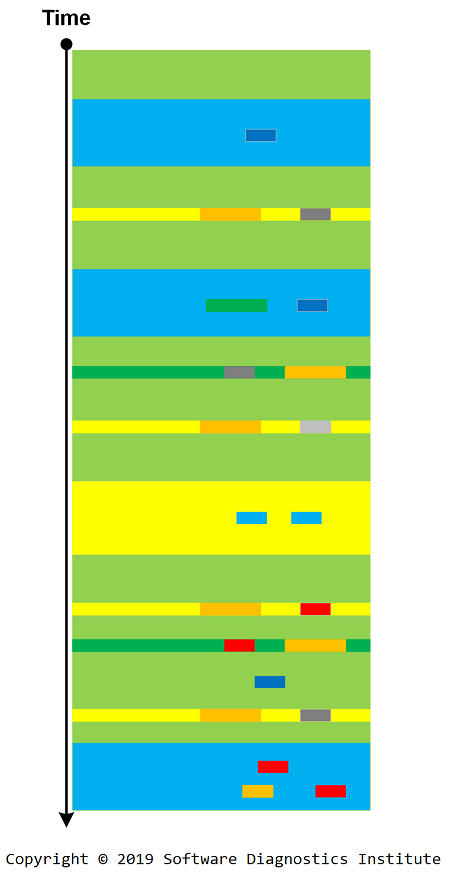

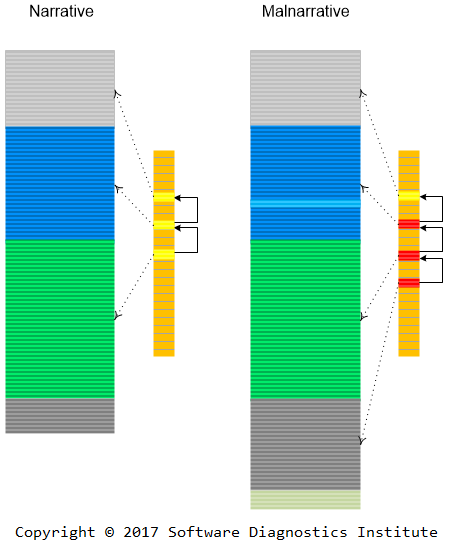

A while ago, we introduced Special and General trace and log analysis with an emphasis on causality. However, both types are still time-like, based on explicit or implicit time ordering. We now extend the same pattern-oriented analysis approach to image analysis, where the ordering of “messages,” “events,” or simply some “data” is space-like, or even metric-like (with an additional direction if necessary). As the initial step, we replace the Time coordinate with some metric based on the nature of the data. For example, the case of Periodic Error is shown in this simplified spatial picture:

A similar replacement can be done for Time Delta -> Space Delta -> Metric Delta and for Discontinuity. We are now assessing the current 170 analysis patterns* (most of which are time-like) in terms of their applicability to image analysis and will submit analysis pattern extensions.
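The replacement of the Time coordinate by a metric can be illustrated with a short sketch. Here the positions of an error are given along an arbitrary metric coordinate (for example, a pixel offset), Metric Delta is the distance between neighbouring occurrences, and Periodic Error is approximated as equal spacing within a tolerance. The function names and the equal-spacing criterion are our simplifying assumptions for this example only.

```python
def metric_deltas(positions):
    """Distances between neighbouring occurrences along the metric coordinate."""
    return [b - a for a, b in zip(positions, positions[1:])]


def is_periodic(positions, tolerance=0):
    """Treat occurrences as periodic when all deltas agree within the tolerance."""
    deltas = metric_deltas(positions)
    # At least two deltas are needed before we can speak about a period.
    return len(deltas) >= 2 and max(deltas) - min(deltas) <= tolerance
```

Nothing in the sketch depends on the coordinate being time, so the same check works for positions measured in any unit.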

* Trace and Log Analysis: A Pattern Reference for Diagnostics and Anomaly Detection, Third Edition, forthcoming (ISBN: 978-1912636044)

10 Years of Software Narratology

On 12 June, 10 years ago, we were looking for a fruitful foundation for software trace and log analysis beyond a sequence of memory fragments. Coincidentally and independently, at that time, we were learning about narratology from some literary theory textbooks. Then we got an insight into the similarities between storytelling and logging. Structures of traditional narrative and their narrative analysis could also guide us in devising new trace and log analysis patterns. Thus, Software Narratology was born. Here we reproduce the original blog post screenshot from Software Diagnostics Library:

A couple of years later, we were contacted by people who were writing software for narratology, so we promptly (in February 2012) decided to organize a webinar to clear up the confusion between Software Narratology and narratology software. Here is the webinar logo we designed at that time (colors symbolize kernel, user, and managed memory spaces):

The recording of this webinar is available on YouTube, and slides are available for download. The transcript of that webinar was later published as a short book which is available in various formats. Volume 7 of Memory Dump Analysis Anthology has a section devoted to diverse topics in Software Narratology partially covered in that webinar. Additional Software Narratology articles are available in subsequent volumes. Reflecting on that history, we think the glimpses of Software Narratology also originated with our earlier thinking about historical narratives in the context of memory dump analysis (March 2009).

After a year, we applied software narratological thinking to malware analysis (March 2013): Malware Narratives, with available slides, recording, and published transcript in various formats.

In subsequent years the following ideas were further discovered, invented, and elaborated:

- Generalized Software Narratives (March 2013)

- Hardware Narratology (April 2013)

- Software Narratology of Things (December 2013)

- Higher-order pattern narratives (August 2014)

- Malnarratives (October 2014)

- Articoding (June 2015)

- Graph-theoretic approach (October 2016)

- Narrachain (October 2017)

Despite the move to contemporary mathematics as a source of new trace and log analysis patterns in recent years, Software Narratology is experiencing its second revolution through the application of Future Narratives* to diagnostic analysis and debugging processes (Bifurcation Point, Polytrace) and the following forthcoming developments:

- Software Narratives as software "autobiographies."

- Cartesian Software Narratives (Cartesian Trace).

- Incorporation of source code into trace analysis via Moduli Traces. We called this originally (June 2013) Generative Software Narratology.

*Future Narratives: Theory, Poetics, and Media-Historical Moment, by Christoph Bode, Rainer Dietrich (ISBN: 978-3110272123)

Analysis Pattern Duality

Some of our memory analysis patterns are parameterized by structural constraints (such as a particular type of space or dump, or a memory region) or objects (for example, synchronization). We recently worked on a few analysis patterns and discovered a type of duality between them, where the parameter itself could have related problems:

ProblemPattern(Parameter) <-> ParameterProblem(ProblemPattern)

For example, Insufficient Memory (Stack) vs. Stack Overflow (Insufficient Memory). In the first analysis pattern variant, insufficient memory may be reported because of a full stack region, and in the second analysis pattern variant, stack overflow is reported because there is not enough memory to expand the stack region.

This duality can aid in new pattern discoveries and especially in analyzing possible root causes and their causal mechanisms (software pathology) given the multiplicity of diagnostic indicators when we consider parameters as analysis patterns themselves. Let’s look at another example: Invalid Pointer (Insufficient Memory). It is a common sequence when a memory leak causes a memory allocation to fail, and then certain pointers remain uninitialized or NULL. Consider its dual, Insufficient Memory (Invalid Pointer), when, for example, memory is not released because some pointer becomes invalid. The latter can happen when memory is overwritten with NULL values, or when an access violation is handled and ignored.

The closest mathematical analogy here is order duality. It is different from the duality of software artifacts, such as logs and memory dumps, and memory space dualities.

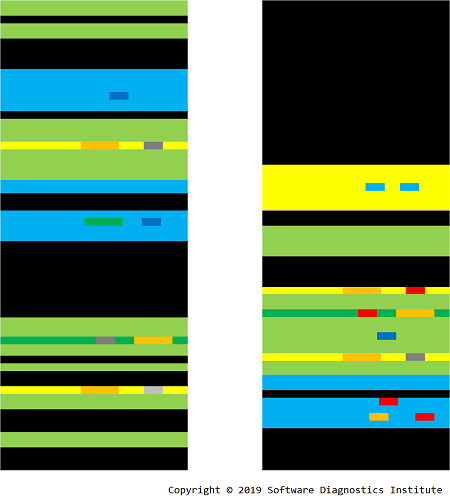

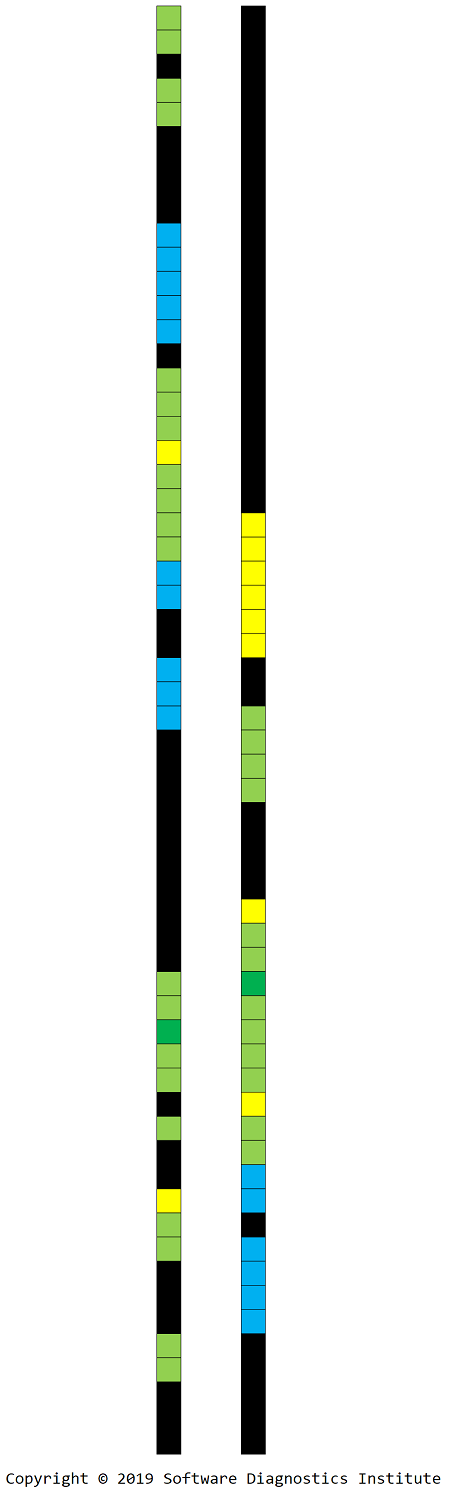

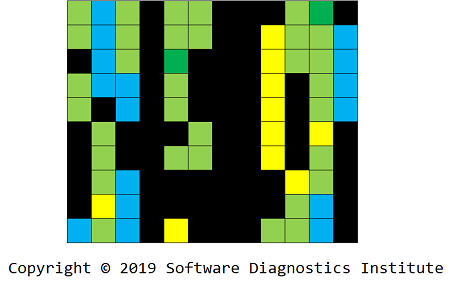

Log’s Loxels and Trace Message’s Mexels: Graphical Representation of Software Traces and Logs

Our system and method stem from texels, voxels, and pixels as elements of textures, 3D and 2D picture representation grids and the way we depict traces and logs in Dia|gram graphical diagnostic analysis language:

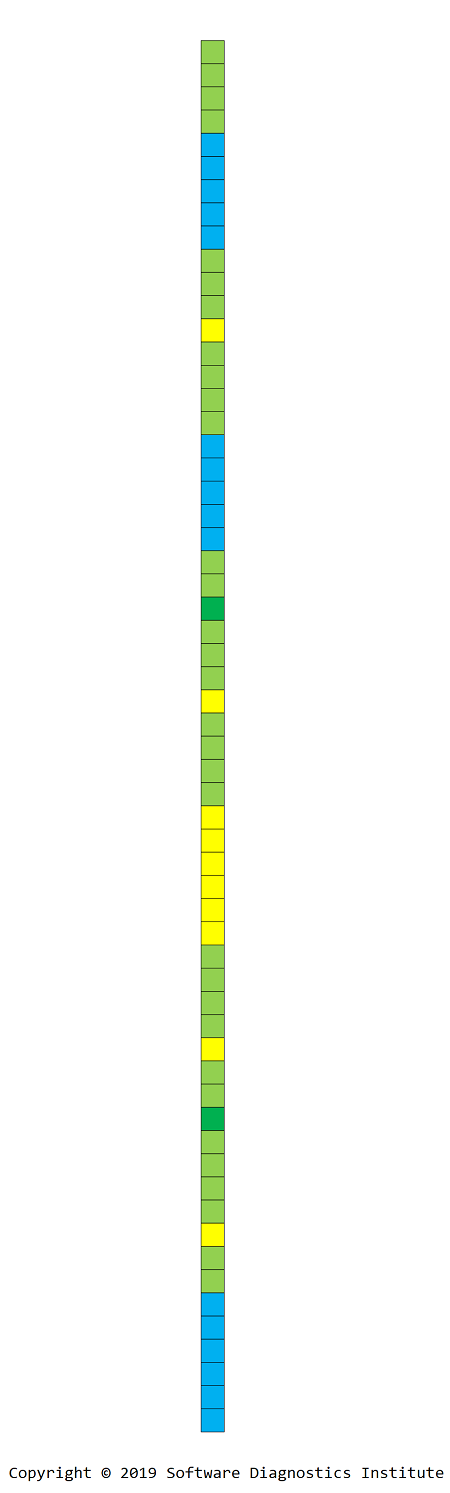

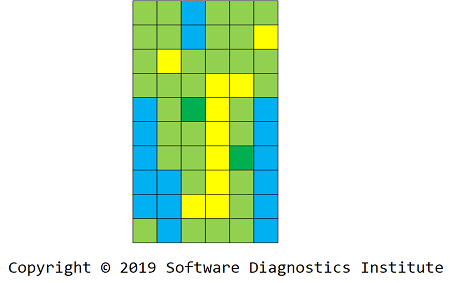

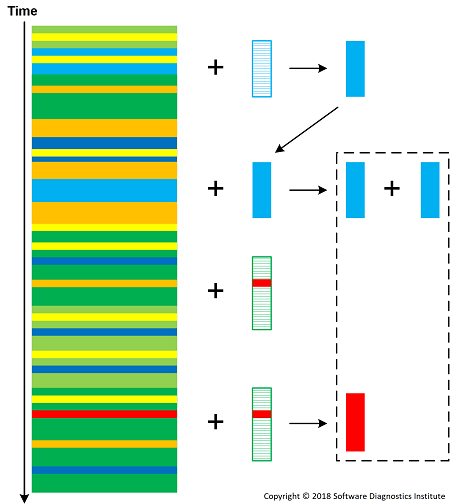

Loxel is an element of a log or software trace. It is usually a log or trace message. Usually, such messages are generated from specific code points, and, therefore, may have unique identifiers. Such UIDs can be mapped to specific colors:

For visualization purposes and 2D processing, we can collapse a 1D picture into a 2D loxel image using top-to-bottom and left-to-right direction:
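As a rough illustration of this loxelation step, the sketch below maps message UIDs to colors and folds the resulting 1D row into a 2D image. The function names, the palette, and the row-major fill order (left to right within a row, rows from top to bottom) are our assumptions for the example; a column-major order would work equally well.

```python
def loxel_row(message_uids, palette):
    """Map each trace message UID to a color: the 1D loxel picture."""
    return [palette[uid] for uid in message_uids]


def fold(row, width, fill=None):
    """Collapse a 1D row into a 2D image of the given width.

    Loxels are placed left to right, and rows follow from top to bottom.
    The last row is padded with `fill` when the row length is not a
    multiple of the width.
    """
    padded = row + [fill] * (-len(row) % width)
    return [padded[i:i + width] for i in range(0, len(padded), width)]
```

For example, five messages with UIDs 1, 2, 2, 3, 1 and a width of 3 give a 2 x 3 image whose last cell is a padding value.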

We can also include Silent Messages in the picture by imposing a fixed time resolution grid:

We apply the same procedure to get 1D and 2D images:
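A fixed time resolution grid can be sketched as follows. Each event is a (timestamp, UID) pair with integer timestamps, events are assumed to be sorted by time, and every grid tick without a message becomes a silent loxel. The `with_silent_messages` name, the silent value 0, and the rule that a later message overwrites an earlier one within the same tick are our assumptions for this example.

```python
def with_silent_messages(events, tick, silent=0):
    """Place (timestamp, uid) events on a fixed time grid.

    Ticks that contain no message are filled with the `silent` value.
    Events must be sorted by timestamp; timestamps and tick are integers
    in the same unit (for example, milliseconds).
    """
    if not events:
        return []
    start = events[0][0]
    slots = (events[-1][0] - start) // tick + 1
    grid = [silent] * slots
    for timestamp, uid in events:
        # A later message in the same tick overwrites an earlier one.
        grid[(timestamp - start) // tick] = uid
    return grid
```

The resulting 1D row has one cell per tick and can then be collapsed into a 2D image in the same way as a row without Silent Messages.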

Each loxel may contain a Message Invariant and variable parts such as Message Constants and other data values such as handles, pointers, Counter Values, and other Random Data in general. We call each variable part a mexel, and mexels form layers in order of their appearance in loxels:

Therefore, for this modeling example, after loxelation and mexelation, we got 3 layers that we can use for anomaly detection via digital image processing and machine learning:
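Mexelation can be sketched in a similar way. In the hypothetical representation below, each loxel is a (UID, variable values) pair, and layer n collects the n-th variable part of every loxel, with a blank value where a message has fewer variable parts. The function name and the data layout are our assumptions.

```python
def mexel_layers(loxels, blank=None):
    """Split the variable parts of each message into layers.

    `loxels` is a list of (uid, variables) pairs. Layer n holds the n-th
    variable part of every loxel in trace order, or `blank` if that loxel
    has fewer than n + 1 variable parts.
    """
    depth = max((len(variables) for _, variables in loxels), default=0)
    return [
        [variables[n] if n < len(variables) else blank for _, variables in loxels]
        for n in range(depth)
    ]
```

Each returned layer has the same length as the trace, so it can be turned into an image with the same procedure that is applied to loxels.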

In conclusion, we would like to note that this is an artificial representation compared with the natural representation where trace memory content is used for pixel data.

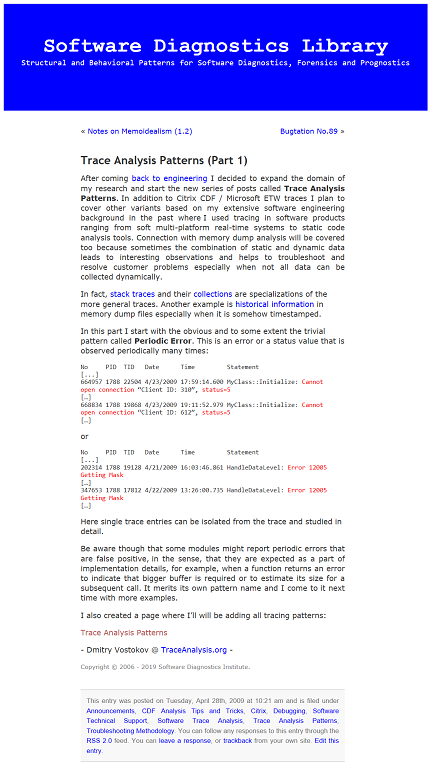

10 Years of Trace and Log Analysis Patterns

On April 28, 2009, we introduced a new category of diagnostic analysis patterns. We reproduce the picture of that original post because it was later edited and split into an introduction and a separate Periodic Error analysis pattern:

At its inception and first publication, the first pattern wasn’t illustrated graphically. Such illustrations were added only later, starting with the bird’s eye view in Characteristic Message Block, then taking their current shape in Activity Region analysis patterns, and finally culminating in Software Trace Diagrams and the Dia|gram graphical diagnostic analysis language. The pictures missing in the first pattern descriptions were later added to the training course, which initially served as a reference for the first 60 patterns:

When more and more patterns were added to the pattern catalog, we published an updated reference, which is now in the second edition and covers more than 130 patterns. Now there are almost 170 patterns at the time of this writing, and the new edition is forthcoming (Software Trace and Log Analysis: A Pattern Reference, Third Edition, ISBN: 978-1912636044).

Initially, trace analysis patterns lacked a theoretical foundation, but in less than two months Software Narratology was born (we track its history in a separate anniversary post). Later, trace analysis and memory dump analysis were combined in a unified diagnostic analysis process, log analysis patterns became a part of pattern-driven software problem solving, and this finally culminated in the birth of pattern-oriented software diagnostics. Both trace analysis patterns and Software Narratology became the foundation of the malware narrative approach (malnarratives) to malware analysis. Also, the same pattern-oriented trace and log analysis approach was applied to network trace analysis (see also the book) and became the foundation for pattern-oriented software forensics and Diagnostics of Things (DoT, the term that we coined). A trace analysis pattern language was proposed for performance analysis.

In addition to biochemical metaphors and artificial chemistry approaches, in the last few years we also extensively explored mathematical foundations of software trace and log analysis, including topology, graph theory, and category theory.

Software trace and log analysis was generalized to arbitrary event traces including memory analysis, and it became a part of pattern-oriented software data analysis.

The current frontier of research at Software Diagnostics Institute is the exploration and incorporation of mechanisms and novel visualization approaches.

DiagWare: The Art and Craft of Writing Software Diagnostic Tools

The forthcoming book about designing and implementing software diagnostic tools (ISBN: 978-1912636037) has the following draft cover:

Introducing Software Pathology

Some time ago we introduced Iterative Pattern-Oriented Root Cause Analysis where we added mechanisms in addition to diagnostic checklists. Such mechanisms became the additional feature of Pattern-Oriented Software Data Analysis principles. Since medical diagnostics influenced some features of pattern-oriented software diagnostics, we found further extending medical metaphors useful. Since Pathology is the study of causes and effects, we introduce its systemic software correspondence as Software Pathology. The parts of the name “path-o-log-y” incorporate logs as artifacts and paths as certain trace and log analysis patterns such as Back Trace. We depict this relationship in the following logo:

Please also note a possible alternative category theory interpretation of “path-olog-y” using an olog approach to paths.

We, therefore, are happy to add Software Pathology as a discipline that studies mechanisms of abnormal software structure and behavior. It uses software traces and logs (and other types of software narratives) and memory snapshots (as generalized software narrative) as investigation media. Regarding the traditional computer graphics and visualization part of medical pathology, there is certain correspondence with software pathology as we demonstrated earlier that certain software defects could be visualized using native computer memory visualization techniques (the details can be found in several Memory Dump Analysis Anthology volumes).

The software pathology logo also prompted us to introduce a similar logo for Software Narratology as a “narration to log” metaphor:

Moving “y” from “Narratology” results in a true interpretation of software tracing: N-array-to-log.

Please note that the log icon in “narratology” logo part doesn’t have any abnormality indicator because a software log can be perfectly normal.

A final note: Software Pathology is different from pathology software. The same distinction applies to Software Narratology vs. narratology software, Software Diagnostics vs. diagnostics software, Software Forensics vs. forensics software, and Software Prognostics vs. prognostics software.

Pattern-Oriented Software Diagnostics, Debugging, Malware Analysis, Reversing, Log Analysis, Memory Forensics

This free eBook contains 9 sample exercises from 10 training courses developed by Software Diagnostics Services covering Windows WinDbg, Linux GDB, and Mac OS X GDB / LLDB debuggers and utilizing pattern-oriented methodology developed by Software Diagnostics Institute. The second edition was updated with the new sample exercises based on Windows 10.

Download the eBook

Memory Dump Analysis Anthology, Volume 11

The following direct links can be used to order the book:

Buy Paperback or Kindle print replica edition from Amazon

Buy Paperback from Barnes & Noble

Buy Paperback from Book Depository

Also available in PDF format from Software Diagnostics Services

This reference volume consists of revised, edited, cross-referenced, and thematically organized selected articles from Software Diagnostics Institute (DumpAnalysis.org + TraceAnalysis.org) and Software Diagnostics Library (former Crash Dump Analysis blog, DumpAnalysis.org/blog) about software diagnostics, root cause analysis, debugging, crash and hang dump analysis, software trace and log analysis written in June 2017 - November 2018 for software engineers developing and maintaining products on Windows platforms, quality assurance engineers testing software, technical support and escalation engineers dealing with complex software issues, security researchers, reverse engineers, malware and memory forensics analysts. This volume is fully cross-referenced with volumes 1 – 10 and features:

- 8 new crash dump analysis patterns with selected downloadable example memory dumps

- 15 new software trace and log analysis patterns

- Introduction to diagnostic operads

- Summary of mathematical concepts in software diagnostics and software data analysis

- Introduction to software diagnostics engineering

- Introduction to narrachain

- Introduction to diagnostics-driven development

- Principles of integral diamathics

- Introduction to meso-problem solving using meso-patterns

- Introduction to lego log analysis

- Introduction to artificial chemistry approach to software trace and log analysis

- WinDbg notes

- Updated C++17 source code of some previously published tools

- Selected entries from debugging dictionary

- List of recommended modern C++ books

- List of recommended books about algorithms

- Author's current CV

- Author's past resume written in WinDbg and GDB styles

This volume also includes articles from the former Crash Dump Analysis blog not previously available in print form.

Product information:

- Title: Memory Dump Analysis Anthology, Volume 11

- Authors: Dmitry Vostokov, Software Diagnostics Institute

- Language: English

- Product Dimensions: 22.86 x 15.24 cm

- Paperback: 273 pages

- Publisher: OpenTask (November 2018)

- ISBN-13: 978-1-912636-11-2

Artificial Chemistry Approach to Software Trace and Log Analysis

In the past we proposed two metaphors regarding software trace and log analysis patterns (we abbreviate them as TAP):

- TAP as “genes” of software structure and behavior.

- Logs as “proteins” generated by code with TAP as patterns of “protein” structure.

We now introduce a third metaphor with strong modeling and implementation potential we are currently working on: Artificial Chemistry (AC) approach* where logs are “DNA” and log analysis is a set of reactions between logs and TAP which are individual “molecules”.

In addition to traces and logs as “macro-molecules”, we also have different molecule families of general patterns (P) and concrete patterns (C). General patterns, general analysis (L), and concrete analysis (A) patterns are also molecules (which may themselves be composed of patterns and analysis patterns) that may serve the role of enzymes. Here we follow the division of patterns into four types. During a reaction, a trace T is usually transformed into a T’ molecule (having a different “energy”), with a marked site so that further collisions there are elastic, which avoids duplicate analysis.

T + Pi -> T’ + Pi + Ck

T + Ci -> T’ + 2Ci

T + Li -> T’ + Li + Ck

T + Li -> T’ + Li + Ak

T + Ai -> T’ + Ai + Ak

Ci + Ck -> Ci-Ck

Cl + Cm -> Ck

Ai + Ak -> Ai-Ak

T + Ai-Ak -> T’ + Ai-Ak + Ci

…

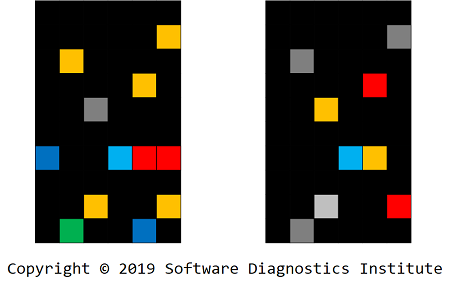

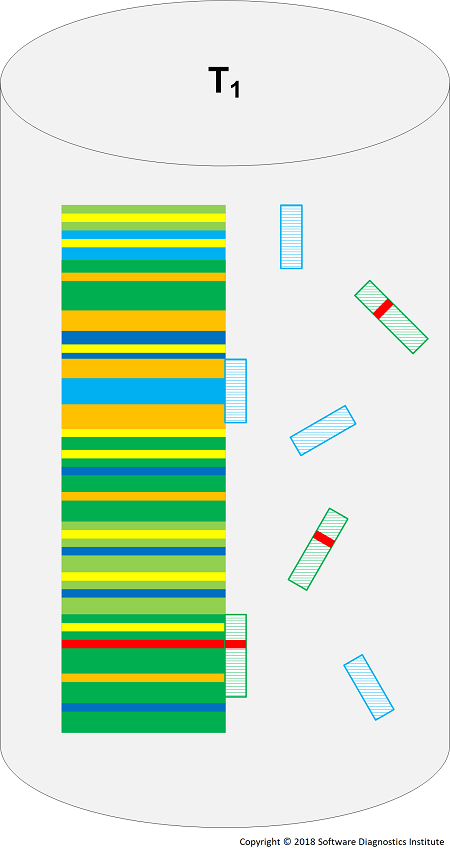

Different reactions can be dynamically specified according to a reactor algorithm. The following diagram shows a few elementary reactions:

A higher concentration of patterns (reaction educts) increases the chances of producing reaction products according to the corresponding reaction "mass action". We can also introduce pattern-consuming reactions such as T + Li -> T' + Ck, but this requires a constant supply of analysis pattern molecules. Intermediate molecules may react with a log as well and be a part of analysis construction (second-order trace and log).

Since traces and logs can be enormous, such reactions can occur randomly according to the Brownian motion of molecules. The reactor algorithm can also use Trace Sharding.
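To make the idea of random collisions more concrete, here is a minimal sketch of a well-stirred reactor for the single reaction T + Pi -> T’ + Pi + Ck. It is a toy model under our own assumptions, not the reactor algorithm itself: the `run_reactor` name is hypothetical, pattern molecules are modelled as named predicates over one message, concentration is modelled by repeating a name in the soup, and a marked (pattern, site) pair makes later collisions at that site elastic.

```python
import random


def run_reactor(trace, soup, predicates, steps, rng):
    """Simulate random collisions of the form T + Pi -> T' + Pi + Ck.

    trace      -- list of log messages (the "macro-molecule" T)
    soup       -- list of pattern names; repeats model a higher concentration
    predicates -- dict mapping a pattern name to a test over one message
    Returns (marked, products): the marked sites that turn T into T' and the
    concrete pattern molecules Ck as (pattern name, message index) pairs.
    """
    marked = set()
    products = []
    for _ in range(steps):
        site = rng.randrange(len(trace))   # random collision site on the trace
        name = rng.choice(soup)            # frequent molecules collide more often
        if (name, site) in marked:
            continue                       # elastic collision: already analyzed
        if predicates[name](trace[site]):
            marked.add((name, site))       # T becomes T'
            products.append((name, site))  # a concrete pattern Ck is produced
    return marked, products
```

The pattern molecule is not consumed, so it stays in the soup for further collisions. With enough steps every matching site is found, but the order of products depends on the random collisions, and a short run may miss some sites.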

Some reactions may catalyze log transformation into a secondary structure with certain TAP molecules now binding to log sites. Alternatively, we can use different types of reactors, for example, well stirred or topologically arranged. We visualize a reactor for the reactions shown in the diagram above:

We can also add reactions that split and concatenate traces based on collision with certain patterns and reactions between different logs.

Many AC reactions are unpredictable and may uncover emergent novelty that can be missed during the traditional pattern matching and rule-based techniques.

The AC approach also allows simulations of various pattern and reaction sets independently of concrete traces and logs to find the best analysis approaches.

In addition to software trace and log analysis of traditional software execution artifacts, the same AC approach can be applied to malware analysis, network trace analysis and pattern-oriented software data analysis in general.

* Artificial Chemistries by Wolfgang Banzhaf and Lidia Yamamoto (ISBN: 978-0262029438)

Lego Log Analysis

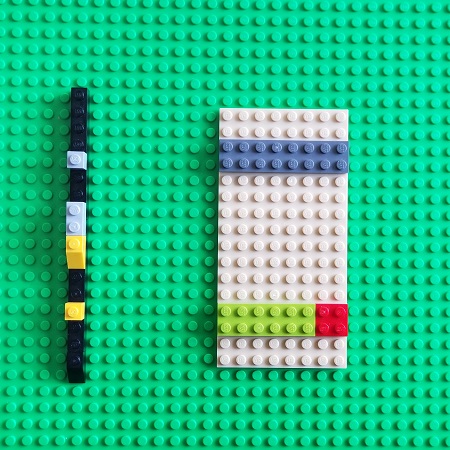

In addition to the Dia|gram graphical diagnostic analysis language that we use to illustrate trace and log analysis patterns, we introduce a Lego-block approach. A typical software log is illustrated in this picture with Lego blocks of different colors corresponding to different trace message types, Motifs, activities, components, processes or threads depending on an analysis pattern:

For a start, we illustrate 3 very common error message patterns (red blocks). The illustration of Error Message shows different types of error data visualizations:

The two illustrations of Periodic Error pattern show typical Error Distribution patterns:

We plan to add more such illustrations in the future to this online article and also include them in the forthcoming Memory Dump Analysis Anthology volumes (starting from volume 11).

Meso-problem Solving using Meso-patterns

Meso-problems are software design and development problems that must be solved satisfactorily, with good quality, within a short, hard-limited time. The time limit is usually not more than an hour. The prefix meso- means intermediate. These meso-problems are distinct from normal software design problems (macro-problems), which require much more time to solve, and from implementation idioms (micro-problems), which are usually implementation language-specific. In contrast to macro-problems, where final solutions are accompanied by software documentation, and micro-problems, solved without any documentation except brief source code comments, meso-problem solutions include a specific narrative outlining the solution process with elements of theatrical performance. In a satisfactory meso-problem solution, such a narrative dominates the actual technical solution, for example, code.

Meso-problems are solved with the help of Meso-patterns: general solutions with accompanying narrative applied in specific contexts to common recurrent meso-problems. Since problem-solving time is limited the solutions may not be optimal, extendable, and maintainable as real-world solutions for similar (macro-)problems. The accompanying narrative should mention such differences.

We should not confuse meso-patterns with elemental design patterns*, elementary building blocks of conventional design patterns. Such patterns and their building blocks can be a part of meso-patterns’ solutions and narratives.

Typically, meso-problem solving occurs during technical interviews. However, it can also be a part of code and design reviews, mentoring and coaching.

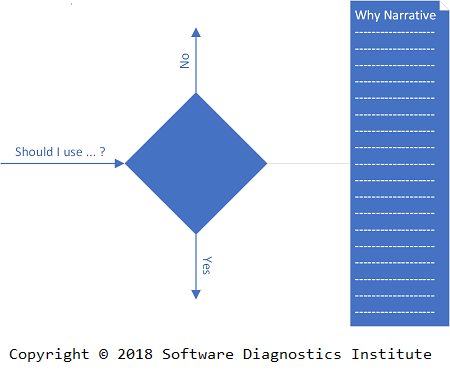

Whereas general patterns and specific idioms address the questions of What and How, meso-patterns also address the Why question.

Because the Why narrative is an integral part of Meso-patterns, they can be applied to homework interview programming problems as well (even when they are not Meso-problems). In such a case, it is recommended to embed Why narratives in source code comments. Such narratives are not necessary for programming contests and online coding sessions when solutions are checked automatically. However, it is advisable to duplicate essential narrative parts in code comments in case the code is forwarded to other team members for their assessment, even if an interviewer is present during the online coding session.

The first general Meso-pattern we propose is called Dilemma (see dilemma definition). Dilemma problems arise at almost every point of a technical interview and need to be solved. They also happen in software design and development, but there their solutions are not usually accompanied by explicitly articulated narratives outlining various alternatives and their pro and contra arguments (except in good books teaching computer science and software engineering problem solving). In that setting, time constraints are not rigidly fixed and can be adjusted if necessary, and the documentation contains only final decisions. In contrast, when we have dilemmas during technical interviews, we need to articulate them aloud and outline alternative solutions, considering various hints from interviewers and asking questions during the problem-solving process. The dilemma problem-solving narrative is as important as the written diagram, code or pseudo-code, and can compensate for incomplete solution code if it is obvious from the narrative that the interviewee would have finished writing the solution code if given more time.

Dilemma meso-problems also happen during design and code review discussions as stakeholders must defend their decisions.

It is important to narrate every Dilemma, as the failure to do so may result in a wrong perception and a downgraded or even rejected solution. For example, even the simple act of choosing a particular naming convention needs to be articulated, making the interviewer aware of the interviewee’s knowledge of coding standards and experience with programming styles dominant on various platforms.

We are building a catalogue of Meso-patterns and will publish them one by one in subsequent articles with examples.
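As an illustration of embedding a Dilemma narrative in source code comments, here is a minimal sketch. The problem (finding the first repeated value), the function name, and the alternatives weighed in the comments are all hypothetical and chosen only to show the shape of such a narrative; they are not taken from the Meso-pattern catalogue.

```python
def first_duplicate(values):
    """Return the first value that repeats in `values`, or None."""
    # Dilemma (naming): snake_case is used because it is the dominant
    # convention in Python code; on another platform a different
    # convention might be the expected choice.
    #
    # Dilemma (algorithm): a set of seen values gives O(n) time at the
    # cost of O(n) extra memory. Sorting first would save memory but
    # would lose the original order needed to report the *first* repeat.
    # Within the time limit, the simpler set-based version is chosen.
    seen = set()
    for value in values:
        if value in seen:
            return value
        seen.add(value)
    # Why None and not an exception: the absence of duplicates is a
    # normal outcome here, not an error condition.
    return None
```

In this sketch the comments carry the Why, so a reviewer who was not present during the session can still see which alternatives were considered and why they were rejected.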

* Jason McC. Smith, Elemental Design Patterns (ISBN: 978-0321711922)

Dump2Picture 2

Eleven years ago, we introduced a static natural memory visualization technique according to our memory visualization tool classification. The program we wrote prepended a BMP file header to a DMP file (the source code was published in Memory Dump Analysis Anthology, Volume 2). However, it was constrained by the 4GB limit of the BMP image file format, which we followed strictly. Because of that, we switched to other image processing tools that allow interpretation of memory as a RAW picture (see Large-scale Structure of Memory Space). Recently, some readers of Memory Dump Analysis Anthology, researchers, and memory visualization enthusiasts asked us for an updated version that can handle memory dumps bigger than 4GB. To allow bigger files, we used a workaround (which we plan to add to our Workaround Patterns catalog): ignoring the file size structure fields for file sizes higher than 4GB. Some image file viewers ignore these fields (we used IrfanView 64-bit for testing). We took the opportunity to use the latest C++17 standard while refactoring the Windows legacy source code.
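The idea of placing a BMP header in front of raw dump bytes can be sketched as follows. This is not the published C++ source. It is a minimal Python illustration under stated assumptions: 32 bits per pixel, a roughly square image, and writing zero into the 32-bit size fields when the real size does not fit, which is only one possible reading of "ignoring" those fields. The function names are hypothetical.

```python
import math
import os
import shutil
import struct

HEADER_SIZE = 54         # 14-byte file header + 40-byte info header
BYTES_PER_PIXEL = 4      # assumption: 32 bits per pixel, so rows need no padding
UINT32_MAX = 0xFFFFFFFF  # largest value a 32-bit BMP size field can hold


def bmp_header(dump_size: int) -> bytes:
    """Build a BMP header presenting dump_size bytes as a roughly square image."""
    pixels = dump_size // BYTES_PER_PIXEL
    width = max(1, math.isqrt(pixels))
    height = pixels // width
    image_size = width * height * BYTES_PER_PIXEL
    file_size = HEADER_SIZE + image_size
    # Workaround sketch (assumption): when a size does not fit in 32 bits,
    # write 0 and rely on viewers that do not use these fields.
    bf_size = file_size if file_size <= UINT32_MAX else 0
    bi_size_image = image_size if image_size <= UINT32_MAX else 0
    # File header: signature, file size, two reserved words, pixel data offset.
    file_header = struct.pack("<2sIHHI", b"BM", bf_size, 0, 0, HEADER_SIZE)
    # Info header: header size, width, height, planes, bit count, compression,
    # image size, x/y resolution, colors used, important colors.
    info_header = struct.pack("<IiiHHIIiiII", 40, width, height, 1,
                              8 * BYTES_PER_PIXEL, 0, bi_size_image, 0, 0, 0, 0)
    return file_header + info_header


def dump_to_picture(dump_path: str, bmp_path: str) -> None:
    """Write the header followed by the unmodified dump contents."""
    with open(dump_path, "rb") as src, open(bmp_path, "wb") as dst:
        dst.write(bmp_header(os.path.getsize(dump_path)))
        shutil.copyfileobj(src, dst)
```

With these assumptions, a 16GB dump maps to a 65536 by 65536 pixel image whose width and height still fit the header fields even though the byte sizes do not.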

The full source code and Visual Studio 17 solution with built Release x64 executable can be found here: https://bitbucket.org/softwarediagnostics/dump2picture

Below are some images we produced.

The picture of the memory dump used in Hyperdump memory analysis pattern:

The picture of the complete 16GB memory dump saved after system start:

The picture of the complete 16GB memory dump saved after a few days of system work:

Integral Diamathics – Tracing the Road to Root Cause

Recently we noticed a published book about biology and mathematics (with some emphasis on category theory) called “Integral Biomathics: Tracing the Road to Reality” (ISBN: 978-3642429606). We liked that naming idea because we are interested in applying category theory to software diagnostics (and diagnostics in general). Our road started more than a decade ago after reading “Life Itself: A Comprehensive Inquiry Into the Nature, Origin, and Fabrication of Life” by Robert Rosen (ISBN: 978-0231075657), recommended in “Categories for Software Engineering” by Jose Luiz Fiadeiro (ISBN: 978-3540373469). We also read the book “Memory Evolutive Systems: Hierarchy, Emergence, Cognition” (ISBN: 978-0444522443), written by one of the editors and contributors to “Integral Biomathics” (Andrée C. Ehresmann), and the semi-popular overview of contemporary physics “The Road to Reality” (ISBN: 978-0679454434) by Roger Penrose. Certainly, the editors of “Integral Biomathics” wanted to combine biology, mathematics, and physics into one integral whole. This is something we also wanted to do for memory analysis and forensics intelligence (the unpublished “Memory Analysis Forensics and Intelligence: An Integral Approach”, ISBN: 978-1906717056), which we planned before we started our work on software trace analysis patterns and software narratology. Our subsequent research borrowed a lot of terminology and concepts from contemporary mathematics.

As a result, we recognized the need to name diagnostic mathematics as Diamathics, and its Integral Diamathics version subtitled as “The Road to Root Cause” since we believe that diagnostics is an integral part of root cause analysis as analysis of analysis. To mark the birth of Diamathics we created a logo for it:

In its design, we used the sign of an indefinite integral and diagnostic components from the Software Diagnostics Institute logo (also featured on the front cover of the “Theoretical Software Diagnostics” book). The orientation of UML components points to the past (forensics) and the future (prognostics) and reflects our motto: Pattern-Oriented Software Diagnostics, Forensics, Prognostics (with subsequent Root Cause Analysis and Debugging).

Diagnostics-Driven Development (Part 1)

Bugs are inevitable in software during its construction. Even if good coding practices, such as test-driven development, checklists for writing effective code, and using well-tested standards-based libraries instead of crafting your own, eliminate non-functional defects such as resource leaks and crashes, functional defects are there to stay. On the other hand, if test cases show that functional requirements are met, some non-functional defects such as leaks may evade detection and manifest themselves during later phases of development. Therefore, it is vital to start diagnosing all kinds of software defects as early as possible. Here, pattern-oriented software diagnostics may help by providing problem patterns (what to look for) and analysis patterns (how to look for it) for different types of software execution artifacts such as memory dumps and software logs. We found the following two best practices useful during the development of various software over the last 15 years:

- Periodic memory dump analysis of processes. Such analysis can be done offline after a process has finished its execution or just-in-time by attaching a debugger to it.

- Adding trace statements as early as possible for checking various conditions, the correct order of execution, and the state. Such Declarative Trace allows earlier application of pattern-oriented trace and log analysis. Typical analysis patterns at this stage of software construction include Significant Events, Event Sequence Order, Data Flow, State Dump, and Counter Value.
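The second practice can be sketched in a few lines. This is a minimal illustration, not the development process itself: the `Trace` class, the event names, and the `process_order` function are hypothetical, and the check shown is only a simple reading of the Event Sequence Order pattern (expected events must appear in the trace in the given order).

```python
from dataclasses import dataclass, field


@dataclass
class Trace:
    """In-memory trace: each message is (sequence number, event name, state)."""
    messages: list = field(default_factory=list)

    def emit(self, event, **state):
        self.messages.append((len(self.messages), event, dict(state)))


def process_order(trace, items):
    """Hypothetical code under construction, traced from the very first version."""
    trace.emit("order.begin", count=len(items))          # significant event
    total = 0
    for item in items:
        total += item
        trace.emit("order.item", running_total=total)    # counter value
    trace.emit("order.end", total=total)                 # state dump
    return total


def in_expected_order(trace, expected):
    """Check that the expected events occur in the trace in the given order."""
    events = iter(name for _, name, _ in trace.messages)
    # `want in events` consumes the iterator up to the first match,
    # so later events are only searched for after earlier ones.
    return all(want in events for want in expected)
```

A check such as `in_expected_order(trace, ["order.begin", "order.end"])` can then be run after every build, long before a full log analysis toolchain is in place.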

We plan to explain this proposed software development process further and provide practical examples (with source code) in the next parts.

Narrachain

Narrachain is an application of blockchain technology to software narratives, stories of computation, such as traces and logs including generalized traces such as memory dumps. Based on Software Narratology Square it also covers software construction narratives and, more generally, graphs (trees) of software narratives.

In the case of software traces and logs, a blockchain-based software narrative may be implemented by adding a distributed trace that records the hash of a message block together with the hash of the previous block (a hash chain). This is depicted in the following diagram, where a Palimpsest Message appeared after the software narrative had been growing for some time:

Performance considerations may affect the size of message blocks.
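The hash chain described above can be sketched as follows. This is an illustration under assumptions, not the Narrascope implementation: SHA-256, a block size of two messages, a local list standing in for the distributed trace, and all function names are hypothetical choices.

```python
import hashlib

GENESIS = "0" * 64  # assumed placeholder hash for the block before the first one


def block_hash(messages, previous_hash):
    """Hash a block of messages together with the hash of the previous block."""
    digest = hashlib.sha256()
    digest.update(previous_hash.encode("ascii"))
    for message in messages:
        digest.update(message.encode("utf-8"))
        digest.update(b"\n")  # separator so message boundaries affect the hash
    return digest.hexdigest()


def build_chain(messages, block_size=2):
    """Return the list of block hashes for a trace split into fixed-size blocks."""
    chain = []
    previous = GENESIS
    for start in range(0, len(messages), block_size):
        previous = block_hash(messages[start:start + block_size], previous)
        chain.append(previous)
    return chain


def first_tampered_block(messages, recorded_chain, block_size=2):
    """Return the index of the first block whose hash differs, or None."""
    recomputed = build_chain(messages, block_size)
    for index, (expected, actual) in enumerate(zip(recorded_chain, recomputed)):
        if expected != actual:
            return index
    return None
```

Overwriting a message after the chain was recorded changes the hash of its block and of every later block, so the first mismatching index points at the block containing the overwritten message. A larger `block_size` means fewer hashes to compute and store but coarser localization.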

Narrachains can be used to prevent malnarratives and prove the integrity of software execution artifacts. The novel approach here is the integration of such a technology into a system of diagnostic analysis patterns (for example, problem description analysis patterns, trace and log analysis patterns, memory analysis patterns, unified debugging patterns). Narrascope, a narrative debugger developed by Software Diagnostics Services, will include support for the NarraChain trace and log analysis pattern as well.

Narrachains can also be used for maintaining the integrity of software support workflows by tracking problem information and its changes. For example, changes in a problem description or newly found diagnostic indicators trigger invalidation of diagnostic analysis reports and re-evaluation of troubleshooting suggestions.

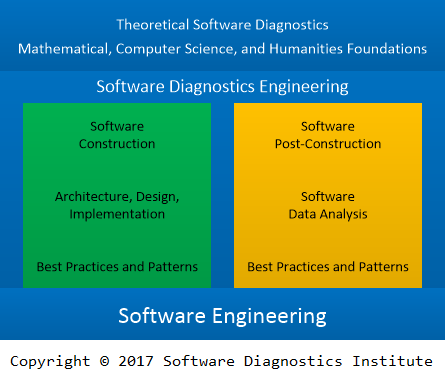

Software Diagnostics Engineering